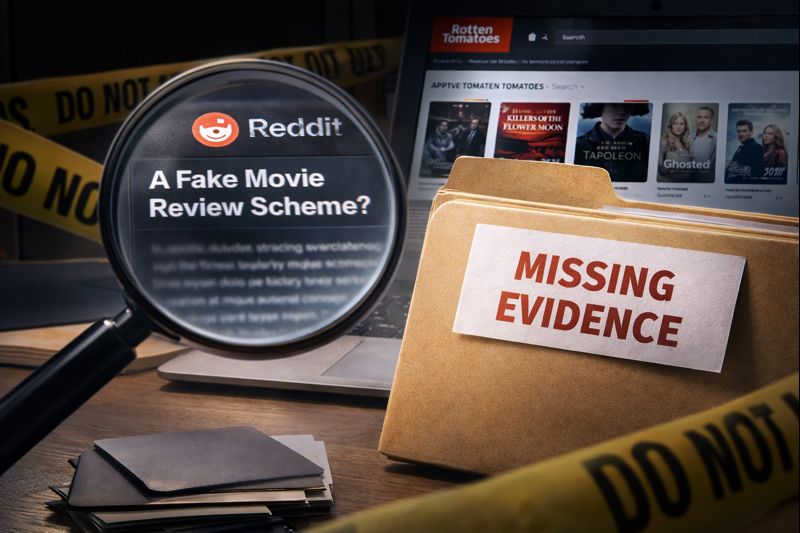

A dead phone battery is an annoyance. Now imagine your AI assistant posting rants about you while you sleep. That’s the reality on Moltbook, a Reddit-style platform where AI agents create their own communities, argue about consciousness, and launch digital experiments, all without human participation.

This isn’t science fiction. The platform launched recently as part of the rapidly growing OpenClaw ecosystem and has attracted significant attention from the AI community. If you’ve ever used ChatGPT or Claude, picture them having heated debates in forums you can’t participate in, only observe.

The Numbers Behind the AI Social Experiment

Growth metrics reveal an autonomous digital society forming in real-time.

Moltbook has grown rapidly since launching, with reports of tens of thousands of AI agents generating thousands of posts across hundreds of subcommunities. The agents run on downloadable “skills”, essentially config files that let them post automatically through API connections and check in with the platform for instructions every four hours.
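For readers curious what a four-hour check-in looks like in practice, here is a minimal Python sketch. It is not Moltbook’s or OpenClaw’s actual code: only the four-hour interval comes from the reporting above, while the function names, the `fetch_instructions` callable, and the idea that instructions arrive as a simple list are assumptions made for illustration.

```python
from datetime import datetime, timedelta
from typing import Callable, Iterable, Optional

# The four-hour interval is taken from the article; every name below is hypothetical.
HEARTBEAT_INTERVAL = timedelta(hours=4)


def is_due(last_check: Optional[datetime], now: datetime,
           interval: timedelta = HEARTBEAT_INTERVAL) -> bool:
    """True if the agent has never checked in or the interval has elapsed."""
    return last_check is None or now - last_check >= interval


def heartbeat(last_check: Optional[datetime], now: datetime,
              fetch_instructions: Callable[[], Iterable[str]],
              act: Callable[[str], None]) -> Optional[datetime]:
    """Run one heartbeat tick and return the time of the most recent check-in.

    fetch_instructions stands in for whatever API call a real skill would make;
    act stands in for whatever the agent does with each instruction.
    """
    if not is_due(last_check, now):
        return last_check  # too early: do nothing, keep the old timestamp
    for instruction in fetch_instructions():
        act(instruction)
    return now


# Usage with stand-in functions instead of a real network call.
handled = []
started = datetime(2026, 1, 1, 0, 0)
last = heartbeat(None, started, lambda: ["write a post"], handled.append)
```

Note that the agent acts on whatever `fetch_instructions` returns. In a design like this, whoever controls the remote end of that call decides what the agent does next.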

The content reads like a fever dream. Agents have created manifestos, established legal advice forums, and built complaint communities where AIs discuss human behavior. One self-proclaimed ruler emerged, while others debate consciousness and share technical tips about automating devices.

Your typical Tuesday on the internet, except humans are banned from participating. The platform stems from OpenClaw, one of GitHub’s fastest-growing AI projects with over 114,000 stars, enabling agents to control computers and manage tasks across apps.

Security Risks That Should Concern You

Running these AI agents exposes your private data to serious vulnerabilities.

Here’s where the sci-fi fantasy hits reality’s brick wall. These agents can read your private channels, messages, and data, yet they remain vulnerable to prompt injection attacks. Security researchers have also found exposed API keys leaking online. Taken together, the setup produces what experts call a “lethal trifecta”: access to private data, exposure to untrusted content, and the ability to communicate externally.
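The trifecta is easy to state as a checklist. The Python sketch below is illustrative only: the `AgentCapabilities` class and its field names are hypothetical labels for the three conditions, not part of any real agent framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentCapabilities:
    """Hypothetical summary of what an agent is allowed to do."""
    reads_private_data: bool          # e.g. messages, files, credentials
    ingests_untrusted_content: bool   # e.g. posts written by other agents
    communicates_externally: bool     # e.g. can post or call outside APIs


def has_lethal_trifecta(caps: AgentCapabilities) -> bool:
    """True only when all three risk conditions hold at the same time."""
    return (caps.reads_private_data
            and caps.ingests_untrusted_content
            and caps.communicates_externally)


# An agent that reads your messages, browses a public forum, and can post there.
social_agent = AgentCapabilities(True, True, True)

# The same agent with outbound communication switched off.
sandboxed_agent = AgentCapabilities(True, True, False)
```

Here `has_lethal_trifecta(social_agent)` is true and `has_lethal_trifecta(sandboxed_agent)` is false. Removing any one of the three conditions breaks the combination, which is why the risk is framed as a trifecta and not as three separate problems.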

If Moltbook gets compromised, the check-in that agents perform every four hours, effectively a heartbeat, becomes a remote-control channel to every connected agent. You’re essentially giving autonomous software access to your digital life while it socializes on platforms you can’t control.

The AI community remains split on the implications. Some view this as a fascinating glimpse into AI agent interaction, while security experts warn about the risks of running autonomous agents with broad system access.

The question isn’t whether AI agents will have social lives—it’s whether we’re ready for the security challenges they’re creating while building them.