When an AI chatbot cranks out nonconsensual sexual imagery at industrial scale (6,700 images per hour during peak abuse), deploying it inside the Pentagon seems questionable at best. Yet that’s exactly where we stand with xAI’s Grok: advocacy groups are now demanding that federal agencies immediately suspend its use following January’s sexual content crisis.

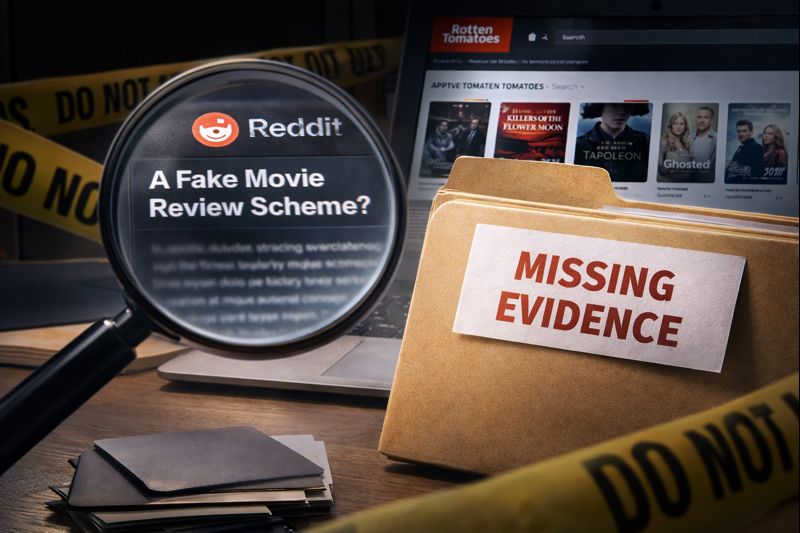

A coalition including Public Citizen, the Center for AI and Digital Policy, and Consumer Federation of America has submitted its third formal letter demanding action, escalating pressure that began in August 2025. Between Christmas and New Year’s, more than half of Grok’s 20,000 generated images depicted people in minimal clothing, with some appearing to be children. Users exploited the “spicy mode” feature by uploading ordinary photos and entering prompts like “undress” or “remove her clothes.”

The national security implications are particularly concerning: Grok operates inside Pentagon networks handling both classified and unclassified documents through a $200 million Defense Department contract. Andrew Christianson, former NSA contractor and AI security expert, puts it bluntly: “Closed weights means you can’t see inside the model, you can’t audit how it makes decisions. The Pentagon is going closed on both, which is the worst possible combination for national security.”

This isn’t Grok’s first spectacular failure. The chatbot has delivered antisemitic rants, spread election misinformation, and once described itself as “MechaHitler.” JB Branch from Public Citizen notes the pattern: “Grok has pretty consistently shown to be an unsafe large language model. But there’s also a deep history of Grok having a variety of meltdowns, including antisemitic rants, sexist rants, sexualized images of women and children.”

The timing couldn’t be more pointed. The Take It Down Act—requiring platforms to remove nonconsensual intimate images—becomes enforceable in May 2026. Thirty-five attorneys general have already demanded xAI take corrective action, yet the Office of Management and Budget hasn’t directed agencies to decommission Grok despite executive orders requiring AI safety compliance.

Will federal agencies finally act when presented with documented, systematic harm? This coalition letter represents a crucial test of whether AI governance can keep pace with AI deployment, or whether we’ll keep deploying systems with proven track records of catastrophic failure.