Key Takeaways

- 92% of soundbar reviewers are untrustworthy. That means only 8% of publications actually test!

- Only 10 reviewers publish trusted soundbar reviews, including RTINGs, TechGear Lab, and Sound Guys. These 10 reviewers’ trusted testing methodologies and reviews fuel our Test Criteria, Custom Questions, and True Scores.

- CNET, TechRadar, and Wired are the top three most popular fake reviewers who earned failing Trust Ratings yet claim to test soundbars.

- We spent 92 hours total evaluating 127 soundbar reviewers for thorough testing and transparency.

Soundbar Category Analysis

Soundbars have been around for a little over 20 years, but few legitimately tested reviews of them exist online. After three years of ongoing research, we found that 92% of soundbar reviewers don’t properly test them, as shown by their lack of proof of testing. To test the testers, we evaluate each reviewer’s soundbar content and assign them a Trust Rating.

Our Dataset Explained

To calculate our proprietary Trust Ratings, we have real people research each reviewer, filling out a total of 67 questions to gauge how they practice transparency and quantitative, proven testing.

- General Trust Rating (worth 20% of final Trust Rating)
  - Authenticity / 9 questions: Publication staff are real humans.
  - Integrity / 7 questions: Reviewer promotes editorial integrity and prioritizes genuinely helping consumers.
  - Clarity / 11 questions: Content is structured to effectively communicate product information to consumers.
  - Quantification & Scoring / 18 questions: Reviewer uses a thorough, numerical scoring system to differentiate products from each other.
- Category Trust Rating (worth 80% of final Trust Rating)
  - Expertise / 8 questions: The reviewer is an experienced expert in a category.
  - Visual Evidence / 3 questions: The content provides visual proof to support the reviewer’s claims.
  - Testing Evidence / 11 questions: The reviewer tested the product and provided their own quantitative measurements from their testing.

That’s how we test the testers to determine who people can trust.
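As a rough illustration of how the two halves combine, here is a minimal Python sketch. The 20%/80% split and the question counts come from the list above. Everything else is an assumption for illustration: that every question counts equally as a pass or fail within its half, and the names `group_score`, `trust_rating`, and `example` are hypothetical. It may not match the exact scoring formula.

```python
# Question counts per section, taken from the list above.
GENERAL = {"Authenticity": 9, "Integrity": 7, "Clarity": 11, "Quantification & Scoring": 18}
CATEGORY = {"Expertise": 8, "Visual Evidence": 3, "Testing Evidence": 11}


def group_score(passed, sections):
    """Percent of questions passed in a group (assumption: every question counts equally)."""
    total = sum(sections.values())
    return 100.0 * sum(passed.get(name, 0) for name in sections) / total


def trust_rating(passed):
    """Final rating: the General half is worth 20%, the Category half 80%."""
    return 0.20 * group_score(passed, GENERAL) + 0.80 * group_score(passed, CATEGORY)


# Hypothetical reviewer: flawless general practices, very little testing evidence.
example = {
    "Authenticity": 9, "Integrity": 7, "Clarity": 11, "Quantification & Scoring": 18,
    "Expertise": 4, "Visual Evidence": 1, "Testing Evidence": 0,
}
print(round(trust_rating(example), 1))
```

Because the Category half carries 80% of the weight, a reviewer like this hypothetical one still lands well under the 60% passing mark despite perfect general scores.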

Here’s an overview of our findings:

| Statistic | Number of Reviewers |
|---|---|
| Total Reviewers Analyzed | 127 |
| Trusted Reviewers that are Certified Testers (Publications)* | 10 (7.87%) |
| Fake Reviewers (Publications)† | 85 (66.93%) |
| Untrustworthy Reviewers (Publications)‡ | 26 (20.47%) |
| Video Reviewers | 6 (4.72%) |

* A reviewer who says they test and earned a Trust Rating of at least 60%

† A reviewer who says they test but they earned a failing Trust Rating under 60%

‡ A reviewer who doesn’t say they test but they earned a failing Trust Rating under 60%
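The three footnote definitions boil down to two inputs: whether a reviewer claims to test, and whether their Trust Rating reaches 60%. A minimal Python sketch of that rule follows. The function name `classify_reviewer` is hypothetical, and the footnotes don't define a label for a reviewer who passes without claiming to test, so the sketch returns a placeholder for that case. The counts are the ones reported in the table above.

```python
PASSING_RATING = 60.0  # passing threshold stated in the footnotes


def classify_reviewer(claims_to_test: bool, trust_rating: float) -> str:
    """Apply the footnote definitions to a single reviewer."""
    passed = trust_rating >= PASSING_RATING
    if claims_to_test and passed:
        return "Certified Tester"
    if claims_to_test:
        return "Fake Reviewer"
    if not passed:
        return "Untrustworthy Reviewer"
    return "Unclassified"  # assumption: this case is not defined in the footnotes


# Reviewer counts as reported in the findings table.
COUNTS = {
    "Certified Testers": 10,
    "Fake Reviewers": 85,
    "Untrustworthy Reviewers": 26,
    "Video Reviewers": 6,
}
total = sum(COUNTS.values())
shares = {label: round(100 * n / total, 2) for label, n in COUNTS.items()}
print(total, shares)
```

The shares computed this way match the percentages in the table, and everything outside the Certified Testers row adds up to the 92% headline figure.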

Of the reviewers we examined, about two-thirds (66.93%) are fake: these 85 reviewers claimed to perform testing as part of their review process but provided insufficient testing data to back up their claims. The next most common type (20.47%) was researched reviews, which feature neither testing nor any claims of testing.

This shows that soundbars are an extremely under-tested category that is largely being analyzed on a qualitative level by most reviewers.

The total cost for setting up and conducting soundbar testing can range from approximately $2,530 to $58,700, not including any recurring costs for the facilities or labor. These high costs are a significant barrier for many reviewers, contributing to the massive issue of fake soundbar reviews online.

What Tests are Quantitative and/or Qualitative?

Quantitative tests feature something that is either measurable or binary in nature, which includes tests that measure sound pressure level and tests that produce frequency response graphs.

Qualitative tests, on the other hand, are based on things that can’t be properly measured or are a “feeling” someone gets. This includes things like ease of use, setup, and soundstage.

How Often should Tests be Updated?

Tests only need to be updated as often as new tests for soundbars (for new features) are developed, which is infrequently.

The Top 3 Reviewers Who Are Certified Testers

When we started Phase 1 of our research in 2021, it became clear who the most thorough, transparent soundbar testers are. These three below earned the highest Trust Ratings in the soundbar category, providing detailed photo evidence, test charts, graphs, and video demonstrations.

These three publications above along with the rest of our passing soundbar reviewers are our trusted data sources that help inform the True Score system. We average the reviewers’ test results across products and present the averages in our guides and reviews. That way you can quickly gauge the true performance of a soundbar across Test Criteria:

After Phase 1 of our research, we realized that each category was scored the same way with the same set of questions. We wanted to further refine our evaluation by adding unique custom testing questions for each individual category, since categories can be very different from each other.

To determine what these questions would be for each category, we investigated the testing methodologies of each publication that has one in the category:

We tried to keep the Custom Questions as fair as possible, meaning the selected criteria aren’t too difficult to test.

More than one reviewer should have already tested the select criteria, thus making it eligible to become a Custom Question.

The Top 3 Testers’ Products Compared

We dove deeper into the top three testers’ product reviews, and we noticed their detailed quantitative scoring systems that spanned across use cases, categories of performance, and specific test criteria.

The depth and rigor they applied in evaluating soundbars allowed us to provide a benchmark for soundbar testing that we’d apply to Phase 1.5 of our research across the same 122 reviewers.

| RTINGs | Tech Gear Lab | Sound Guys |
|---|---|---|
| Samsung HW-Q990C: Mixed Usage 8.5, Dialogue/TV Shows 8.8, Music 8.5, Movies 8.4, Stereo Frequency Response 8.4, Audio Latency – Optical 8.0, Build Quality 8.5 | Bose Smart Soundbar 700: Overall 80, Sound Quality 8.5, Ease of Use 7.5, Volume 8.0, Style/Design 7.0 | Sennheiser Ambeo 3D MAX: Sound Quality 9.7, Bass 9.3, Midrange 8.9, Highs 8.9, Durability/Build Quality 9.6, Value 7.5, Design 9.2 |
| Bose Smart Soundbar 700: Mixed Usage 7.3, Dialogue/TV Shows 7.7, Music 7.9, Movies 6.7, Stereo Frequency Response 7.5, Latency 9.1, Build Quality 8.5 | Samsung HW-Q990C: Did not review (DNR) | Samsung HW-Q990C: DNR |
| Sennheiser Ambeo 3D MAX: Mixed Usage 8.0, Dialogue/TV Shows 8.5, Music 7.6, Movies 7.8, Stereo Frequency Response 7.9, Latency 7.5, Build Quality 7.5 | Sennheiser Ambeo 3D MAX: DNR | Bose Smart Soundbar 700: DNR |

We noticed that RTINGs, Tech Gear Lab and Sound Guys all evaluate sound quality, connectivity, and design, which was a big indicator that we should apply these Categories of Performance to our own Testing Methodology and True Scores system for soundbars.

When certain Test Criteria overlap across reviewers, like Volume and Build Quality, that’s another indicator that we should consider them in our own soundbar content.

The most trusted reviewers won’t always test the exact same criteria. RTINGs tests several criteria that no other reviewer tests, like Total Harmonic Distortion (THD). Despite not being commonly tested, THD measures how accurately a soundbar reproduces audio without adding unwanted frequencies. It’s a critical factor in assessing sound quality, which is why we decided to add it and other important criteria to our Testing Methodology.

Type of Tests & The Best Testers for Each

As we looked further into the top three soundbar reviewers’ content, we identified which ones excelled in certain types of tests. Various types of product tests provide a comprehensive understanding of a product’s performance and suitability.

| Type of Test | Why It’s Important | Best Reviewers That Have This Test |
|---|---|---|
| Photo Tests | High-quality photo tests are crucial as they provide clear visual evidence of design, build, and size, allowing consumers to assess aesthetics and practicality. | A. RTINGs (Paywalled) B. TechGearLab |
| Test Charts and Graphs | Test charts and graphs are invaluable for displaying precise soundbar performance metrics, such as frequency response and sound pressure levels, offering a quantitative analysis that aids in comparing different models. | A. RTINGs (Paywalled) B. TechGearLab |
| Video Tests | They are a visual demonstration of the soundbar’s performance in real-world scenarios, providing a dynamic assessment of sound quality, user interface, and features in action. | RTINGs (Paywalled) |

Together, all of these tests enable a well-rounded assessment, aiding consumers in making more informed and confident purchasing decisions. Notice how RTINGs has all of these tests, which contribute to its leading soundbar Trust Rating.

Best Expert Testers

Meet the leading expert testers, certified professionals who are the driving force behind the most reliable soundbar reviews on our top 3 reviewer sites:

- Dimitris Katsaounis – RTINGs Profile, LinkedIn, No Twitter

- Michelle Powell – TechGear Lab Profile, MuckRack, No Twitter

- Christian Thomas – SoundGuys Profile, MuckRack, No Twitter

Testing Equipment Used

This is the equipment that the top testers used to ascertain the quality of soundbars:

| Equipment | What It Measures | Why It’s Important | Price |
|---|---|---|---|
| Sound Pressure Level Meter | Sound Pressure Level (dB) | Measures the volume output of the soundbar to ensure it can operate at safe, effective levels without distortion | $30 – $200 |
| Microphone Array | Audio Input | Captures sound from multiple directions to analyze the soundbar’s output quality and surround sound capabilities | $200 – $1,000 |
| Soundbar | Audio Output | The main device being tested for audio performance and quality | $100 – $2,000 |
| Test Room | Ambient Sound Characteristics | Controlled environment to accurately measure soundbar performance without external noise interference. This includes soundproofing, acoustic treatment, furniture, and utility and maintenance costs. | $2,000 – $50,000 |
| Frequency Response Software | Frequency Range and Response | Analyzes the soundbar’s ability to reproduce all frequencies of sound accurately, which is crucial for high-fidelity audio. Free options include Room EQ Wizard (REW), Friture, and TrueRTA. | $0 – $500 |
| Video Test Pattern Generator | Latency | Measures the delay between the input signal and audio output, important for synchronizing audio with video | $200 – $5,000 |

As you can see, thoroughly testing soundbars isn’t cheap. To properly test just one soundbar, the cost can range from $2,530 to $58,700, which is a big factor in why most reviewers don’t properly test these products.
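The $2,530 to $58,700 range is simply the sum of the low and high ends of each price range in the equipment table. A short Python sketch of that arithmetic, with hypothetical variable names:

```python
# (low, high) price in USD for each item, copied from the equipment table.
EQUIPMENT_COSTS = {
    "Sound Pressure Level Meter": (30, 200),
    "Microphone Array": (200, 1_000),
    "Soundbar": (100, 2_000),
    "Test Room": (2_000, 50_000),
    "Frequency Response Software": (0, 500),
    "Video Test Pattern Generator": (200, 5_000),
}

low_total = sum(low for low, _ in EQUIPMENT_COSTS.values())
high_total = sum(high for _, high in EQUIPMENT_COSTS.values())
print(f"${low_total:,} to ${high_total:,}")
```

The test room dominates both ends of the range, so a reviewer who already has a suitable controlled space faces a much lower setup cost.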

That cost is no excuse, though, for a reviewer to claim they tested soundbars when they didn’t, as the most popular failing soundbar reviewers below do. As a quick solution, we suggest reviewers say that they “researched” their soundbars rather than “tested” them.

The Top 3 Soundbar Reviewers That Failed

The following three soundbar reviewers are fake reviewers who claim to test soundbars and earn the most soundbar keywords. We recorded this soundbar keyword dataset from Ahrefs in April of 2024.

By earning a failing Trust Rating and claiming to test, these reviewers are publishing fake soundbar reviews that aren’t properly tested.

We suggest that these publishers earn a higher Trust Rating by improving their testing standards, practicing transparency, and publishing more quantitative test data that would answer our Custom Questions. If that’s not possible, they should change any mentions of “testing” in their soundbar reviews to “researched”.

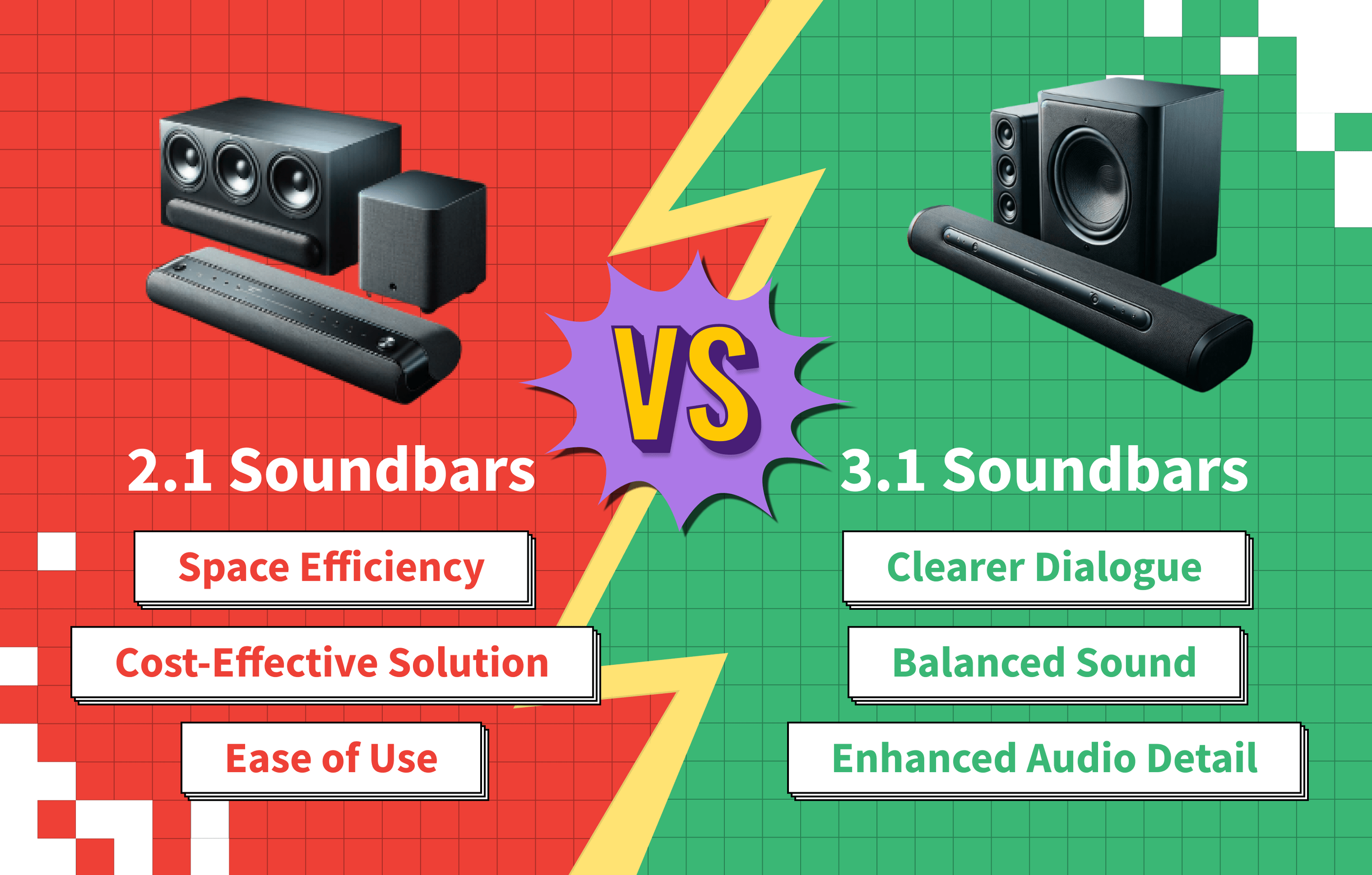

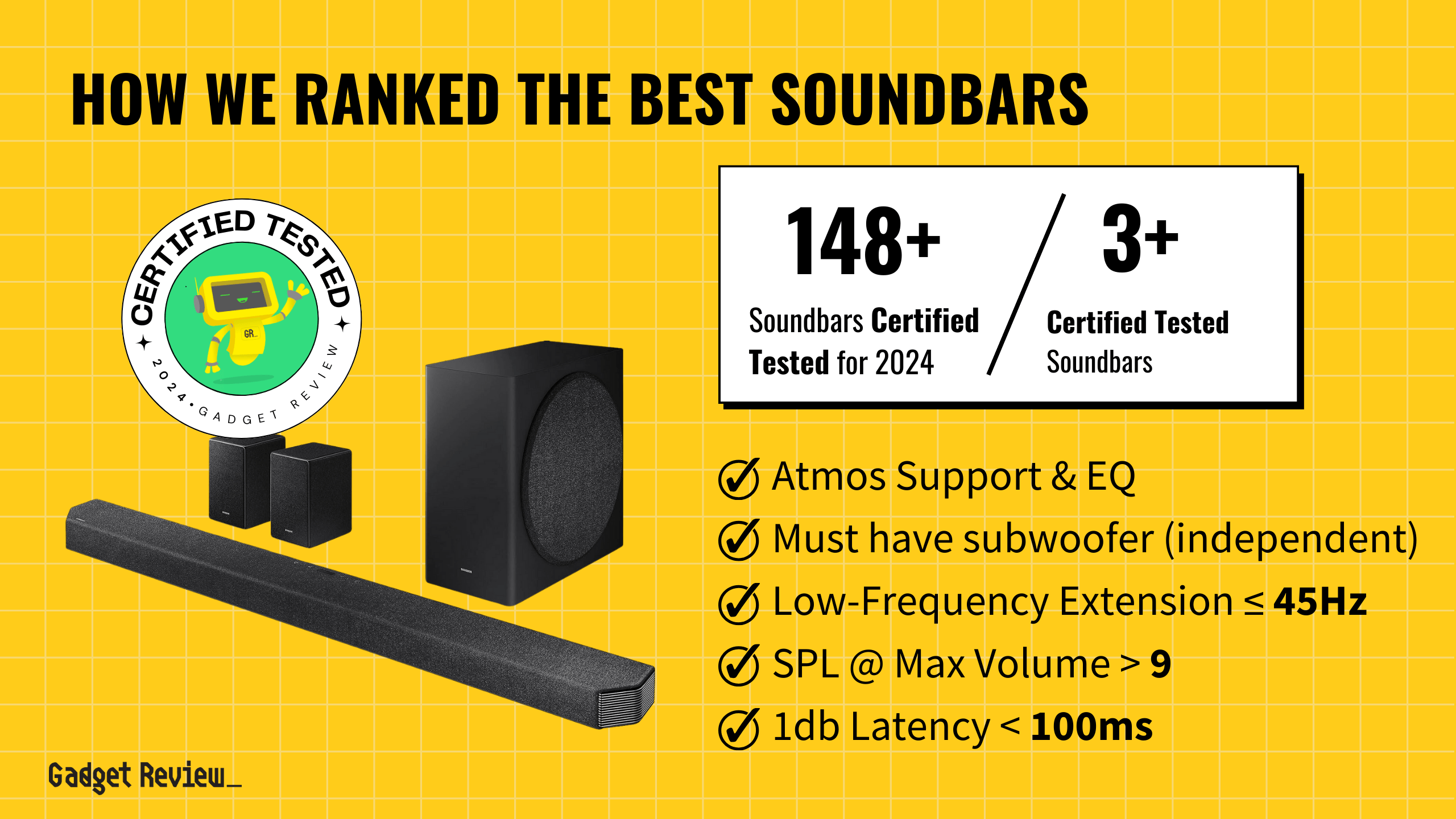

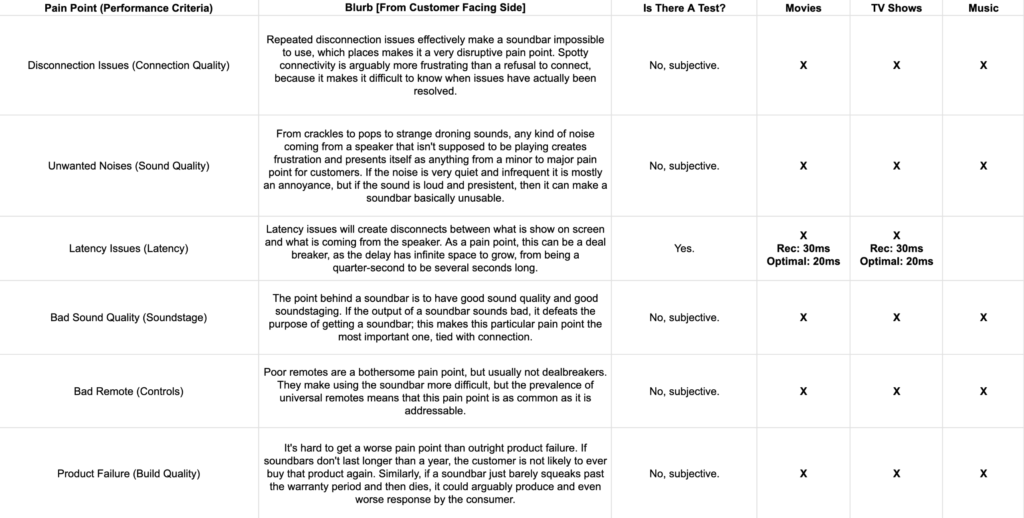

Categories Of Performance & Test Criteria

From our Phase 1 of research, we identified the important features (known as Test Criteria) used by the most trusted soundbar reviewers’ product reviews and Testing Methodologies. Each of the 9 Test Criteria is organized into one of three Categories of Performance. Here are their definitions:

- Category of Performance – An encapsulation of related Test Criteria to evaluate the overall performance of a product in a certain category. The right criteria must be selected to properly assess a Category of Performance.

- Test Criteria – Individual standards, measurements, or benchmarks used to evaluate and assess the performance of a product for a specific use or purpose. These fuel our Trust List which lists the soundbar reviewers you can trust and can’t trust.

It’s important to note that not every Test Criterion that applies to soundbars is covered here, only the ones that matter most to the most prevalent use cases for soundbars.

The section below helps explain the Test Criteria of soundbars along with their units of measurement and why they’re important to test. Each of them is grouped into their own Categories of Performance, which we’ll define:

Soundbar Categories of Performance

When deciding on the Categories of Performance and Test Criteria, we looked at what our trusted reviewers test along with the pain points we found in customer reviews.

Eventually we learned which Categories of Performance and Test Criteria matter the most to users and are tested by our trusted reviewers:

| Category Of Performance | # of Test Criteria | Definition |
|---|---|---|
| Sound Quality | 5 | This encompasses all aspects of a soundbar’s audio output to evaluate how effectively it reproduces sound across various media types. |
| Connectivity | 3 | A catch-all for the ability for the soundbar to connect to things reliably and without issue, providing a low-latency, frustration-free experience. |
| Design | 1 | This references the actual overall physical structure of the soundbar, covering anything from how it feels to how durable it is to how it’s shaped. |

Sound Quality, Design, and Connectivity represent three fundamental Categories of Performance for soundbars, and each is made up of Test Criteria that address aspects of a soundbar’s functionality and user experience.

Sound Quality

This encompasses all aspects of a soundbar’s audio output to evaluate how effectively it reproduces sound across various media types. This consists of five Test Criteria.

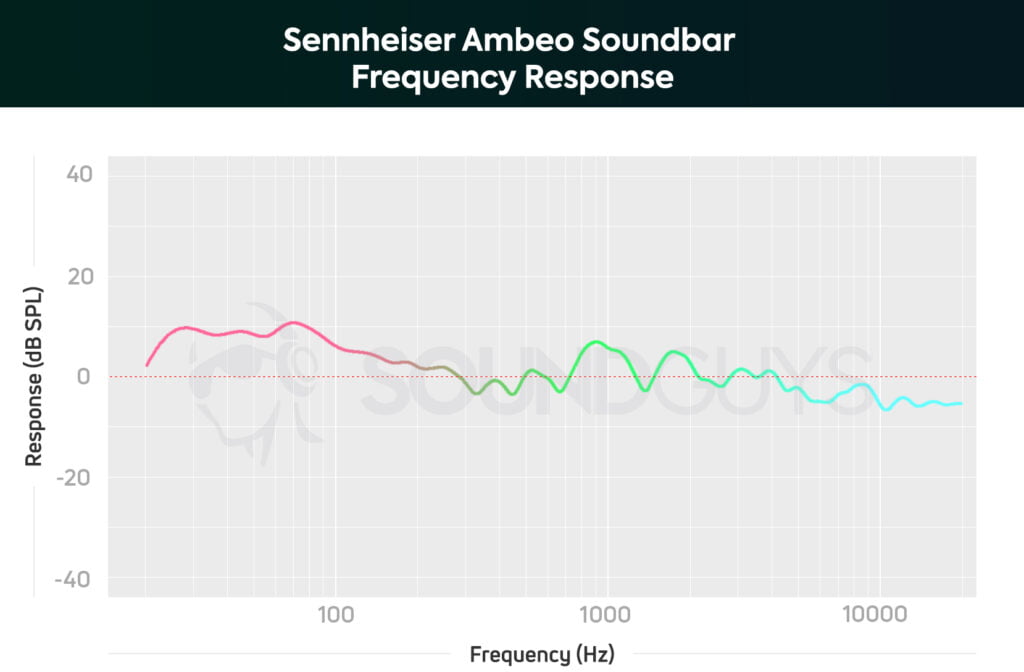

A. Balance (Frequency Response)

The overall sound produced by a soundbar, taking into account how loud the high frequencies and low frequencies get. Frequency response and balance are measured by both RTINGs and Sound Guys, so there is some testing occurring.

- Unit of Measurement: Integer

- Type of Criteria: Quantitative

- Testing Equipment: Test Room, Microphone Array, Soundbar and Subwoofer, Sound Pressure Level Meter

- Why It’s Important: “Balanced” sound is a bit of a loaded term, but one way to measure the overall balance of a soundbar is to look at how much of a difference there is between the highs and lows. That difference affects whether a bar sounds boomy, sparkly, or flat. (Flat in this case is not bad; it simply means the soundbar isn’t elevating one section of sound over others.)

B. Low-Frequency Extension

This is the lowest frequency at which a soundbar’s output is still within 6dB of the target response. Only RTINGs measures low-frequency extension, so additional testing is needed.

- Unit of Measurement: Hz

- Type of Criteria: Quantitative

- Testing Equipment: Test Room, Microphone Array, Soundbar and Subwoofer, Sound Pressure Level Meter

- Why It’s Important: The low-frequency extension on a soundbar is basically how the bass sounds. When a soundbar has good low-frequency extension it can produce lower frequencies and really give bass a rumbling punch.
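To make the definition concrete, here is a minimal Python sketch that reads low-frequency extension off a measured frequency response. The 6 dB drop comes from the definition above. The function name, the flat target level, and the sample measurements are all hypothetical, and a real measurement pipeline may interpolate between measured points rather than picking the first one that clears the threshold.

```python
def low_frequency_extension(freqs_hz, levels_db, target_db, drop_db=6.0):
    """Return the lowest measured frequency whose level is within drop_db of the target.

    Assumes a flat target level and no interpolation between measurement points.
    Returns None if no measured point reaches the threshold.
    """
    threshold = target_db - drop_db
    for freq, level in sorted(zip(freqs_hz, levels_db)):
        if level >= threshold:
            return freq
    return None


# Hypothetical measurements: output rolls off below roughly 50 Hz.
freqs = [20, 30, 40, 50, 60, 80, 100]
levels = [55, 62, 70, 75, 78, 80, 80]
print(low_frequency_extension(freqs, levels, target_db=80))
```

A lower returned frequency means deeper bass: in this made-up data the soundbar would be credited with extension down to the first point that sits within 6 dB of the 80 dB target.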

C. Soundstage

How wide and large the sound a soundbar creates feels to the user. Large, deep soundstages are preferable to small, shallow ones. Only RTINGs measures soundstage, so additional testing is needed.

- Unit of Measurement: Subjective

- Type of Criteria: Qualitative

- Testing Equipment: Soundbar

- Why It’s Important: The way that a soundbar sounds is due to a variety of factors, and soundstaging, being subjective, is a major component of the user-facing part of the experience.

D. Sound Pressure Level

How loud a soundbar can get. Also known as maximum volume, SPL is measured in decibels and is covered by both RTINGs and Tech Gear Lab, so there is some testing occurring.

- Unit of Measurement: dB SPL

- Type of Criteria: Quantitative

- Testing Equipment: Sound Pressure Level Meter, Soundbar, Test Room

- Why It’s Important: The maximum volume a soundbar can reach can be measured using sound pressure levels.
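For reference, decibels of sound pressure level are defined relative to a reference pressure of 20 micropascals. The Python sketch below shows the conversion from a measured RMS pressure to dB SPL. The function name `spl_db` is hypothetical, and the example pressures are illustrative rather than measurements from any reviewer.

```python
import math

P_REF_PA = 20e-6  # standard reference pressure for dB SPL in air, in pascals


def spl_db(p_rms_pa: float) -> float:
    """Convert an RMS sound pressure in pascals to dB SPL."""
    if p_rms_pa <= 0:
        raise ValueError("pressure must be positive")
    return 20 * math.log10(p_rms_pa / P_REF_PA)


# Illustrative pressures only: the reference pressure itself, and 1 pascal.
for pressure in (20e-6, 1.0):
    print(f"{pressure} Pa -> {spl_db(pressure):.1f} dB SPL")
```

Because the scale is logarithmic, each tenfold increase in pressure adds 20 dB, which is why small differences in a soundbar's maximum dB SPL can be clearly audible.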

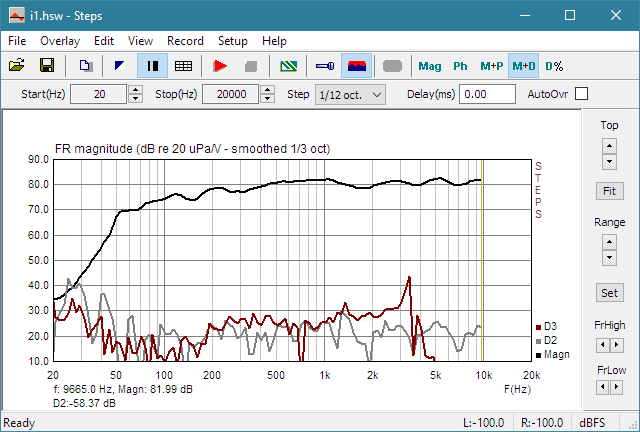

E. Total Harmonic Distortion (THD)

How accurately a soundbar reproduces audio without introducing unwanted harmonic frequencies. Lower THD values are preferable as they indicate higher sound fidelity, especially at higher volumes. Only RTINGs measures THD, so additional testing is needed.

- Unit of Measurement: Integer

- Type of Criteria: Quantitative

- Testing Equipment: Soundbar, Audio Analyzer

- Why It’s Important: THD is important at higher volumes where poor quality soundbars tend to introduce more distortion, affecting the overall audio quality. Understanding THD helps in determining the soundbar’s ability to deliver clear and precise audio across various volume levels.
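One common definition of THD is the ratio of the combined harmonic amplitudes to the amplitude of the fundamental tone. The Python sketch below applies that definition and reports the result as a percentage. The function name and sample amplitudes are hypothetical, and reviewers such as RTINGs report weighted THD figures whose exact formulas aren't described here.

```python
import math


def thd_percent(fundamental_amplitude: float, harmonic_amplitudes) -> float:
    """THD as a percentage: root-sum-square of the harmonics over the fundamental."""
    if fundamental_amplitude <= 0:
        raise ValueError("fundamental amplitude must be positive")
    harmonic_rss = math.sqrt(sum(a * a for a in harmonic_amplitudes))
    return 100.0 * harmonic_rss / fundamental_amplitude


# Hypothetical amplitudes: a 1.0 fundamental with two small harmonics.
print(round(thd_percent(1.0, [0.03, 0.04]), 2))
```

A soundbar that adds no harmonics at all would score 0%, and the figure grows as the harmonics get stronger relative to the test tone.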

Connectivity

A catch-all for the ability for the soundbar to connect to things reliably and without issue, providing a low-latency, frustration-free experience. This consists of three Test Criteria.

A. Ease of Use

How easy a soundbar is to use. Given that ease of use is purely qualitative and therefore very easy to “test”, it is covered by all three reviewers.

- Unit of Measurement: Subjective.

- Type of Criterion: Qualitative

- Testing Equipment: Soundbar

- Why It’s Important: How easy a soundbar is to use is a key part of using it in the first place. Difficult-to-use soundbars degrade the experience of owning one.

B. Setup

How easy it is to set up a soundbar. Similarly to ease of use, setup is a purely qualitative Test Criterion, and it is covered by all three reviewers.

- Unit of Measurement: Subjective.

- Type of Criterion: Qualitative

- Testing Equipment: Soundbar

- Why It’s Important: All soundbars have a setup process, so how easy one is to set up directly impacts the experience of owning one. This includes wiring, initial setup, and calibration.

C. Latency

How much of a delay there is between what is happening on the screen and the output of the soundbar. Given that only RTINGs tests latency, this Test Criterion needs more testing.

- Unit of Measurement: ms

- Type of Criterion: Quantitative

- Testing Equipment: Soundbar, A/V Sync Test Software, TV

- Why It’s Important: When sound doesn’t line up with the visuals, it creates a break in immersion and creates frustration. Sound should match up perfectly (or near enough perfectly) with the visuals.

Design

This term references the actual overall physical structure of the soundbar, covering anything from how it feels to how durable it is to how it’s shaped. This has one Test Criterion.

Build Quality

Because Design is generally assessed qualitatively, Build Quality is no different. This Test Criterion covers how well built a soundbar is, which in turn affects its overall lifespan. It is covered by every top reviewer.

Additional Features

A potpourri of different little bits and bobs, from remotes to the voice assistant.

- Remote – Often lumped into build quality, this isn’t particularly testable, only mentionable. RTINGs pays special attention to the remote, and TGL and Sound Guys also cover it.

- Voice Assistants – Another qualitative-only criterion. Only RTINGs covers voice assistant performance, but it is not an integral part of the soundbar experience, so while additional testing would be nice, it isn’t as necessary as for the other criteria we’ve mentioned.

Usage Cases

After identifying the most important Categories of Performance and Test Criteria, we categorized them according to real-world applications of TV shows, movies, and music listening.

TV Shows & Movies

Tests: Low Frequency Extension, Balance, Latency

- Low Frequency Extension – This test checks how low in frequency a soundbar can play before its output falls below a target level, using a frequency response chart recorded from the soundbar’s output.

- Balance – This test measures the difference between how loud the highest and lowest frequencies get on a soundbar. It requires a frequency response graph recorded from the soundbar’s output.

- Latency – A test measuring the delay between an on-screen event that produces a sound and the soundbar outputting that sound.

Music

Tests: Low Frequency Extension, Balance, Sound Pressure Level

- Sound Pressure Level – The maximum volume that a soundbar is able to produce, tested using a sound pressure level meter.

Category Specific Custom Questions

During Phase 1.5 of our research that we initiated in 2023, we fine-tuned our Trust Rating process further. The latest phase involves the addition of “custom questions” tailored to individual categories since the type of testing can vary greatly across categories.

These questions are designed to cut to the core of what a consumer looks for in a product. They help verify that a reviewer not only used a product but also properly tested it, producing meaningful data and insights that a consumer can use to make a purchase decision. For this category, our custom questions are:

- Does the reviewer run quantitative tests for frequency response?
  - Frequency response is the primary datapoint of any audio device, representing what frequencies of sound the device is able to reproduce. The range of human hearing is approximately 20 Hz to 20 kHz, so that’s the most common target range to hit. If you play audio that goes outside of the device’s frequency range, such as a song with bass too deep to be accurately reproduced, those parts of the song will simply seem to disappear, negatively impacting the listening experience.
- Does the reviewer run quantitative tests for max volume?
  - Max volume is fairly self-explanatory, representing the absolute loudest sound a speaker can produce, even if it comes out distorted or clipped. (Some reviewers will stop at the point that audible distortion occurs instead.)
  - If a speaker’s max volume is too quiet and/or sounds distorted at its high end, it will be less suitable for such applications; if a speaker’s max volume is high above the range of comfortable listening, then you can keep it as loud as you like without worrying about distorting the output.
- Does the reviewer run quantitative tests for latency?
  - Latency is the amount of time it takes for sound to be processed between the point it’s requested by the system and when it actually comes out of the speakers. Latency is important for both gaming and media viewing. A noticeable latency between an on-screen action, such as firing a weapon or characters speaking, and actually hearing the resulting sound can be distracting or even disorienting for the viewer.

Soundbar Testing Methodology By Reviewer

1. RTINGs

The massive array of quantitative tests performed by RTINGs continues to secure them a position as an industry leader when it comes to product testing and evaluation. Their tests range from very sharp, obsessive quantitative examinations of important performance criteria, to clearly labeled and thoughtfully explained qualitative tests for anything they can’t accurately measure.

- Dialogue/TV Shows – 30%
  - Stereo Frequency Response – 50.0%
    - Std. Err. – 40% [QT]
    - Low-Frequency Extension – 55% [QT]
    - High-Frequency Extension – 5% [QT]
  - Center – 18.0%
    - Localization – 40.4% [QT]
    - Std. Err. – 36.36% [QT]
    - SPL @ Max Volume – 18.18% [QT]
    - Weighted THD @ 80dB – 3.03% [QT]
    - Weighted THD @ Max Volume – 2.02% [QT]
  - SPL @ Max Volume – 15.0%
    - SPL @ Max Volume – 60% [QT]
    - DRC @ Max Volume – 40% [QT]
  - Dialogue Enhancement – 5.0% [QL]
  - Auto-Volume/Night Mode – 5.0%
  - Stereo Total Harmonic Distortion – 3.0%
    - Weighted THD @ 80dB – 60% [QT]
    - Weighted THD @ Max Volume – 40% [QT]
  - Latency – 2.0%
    - ARC – 40.0% [QT]
    - Optical – 32.5% [QT]
    - Full HDMI In – 27.5% [QT]
  - Wireless Playback – 2.0%
    - Bluetooth – 60.0%
    - Wi-Fi Playback – 20.0%
    - Chromecast built-in – 10.0%
    - Apple AirPlay – 10.0%


- Music – 25%
  - Stereo Frequency Response – 53.0% [QT]
  - Stereo Soundstage – 20.0% [QL]
  - Stereo Dynamics – 17.0% [QT]
    - SPL @ Max Volume – 60% [QT]
    - DRC @ Max Volume – 40% [QT]
  - Stereo Total Harmonic Distortion – 2.0%
    - Weighted THD @ 80dB – 60% [QT]
    - Weighted THD @ Max Volume – 40% [QT]
  - Room Correction – 2.0% [QL]
  - Bass Adjustment – 2.0% [QT]
  - Treble Adjustment – 2.0% [QT]
  - Wireless Playback – 2.0%

- Movies – 45%
  - Stereo Frequency Response – 35.0% [QT]
  - Stereo Soundstage – 14.0% [QL]
  - Stereo Dynamics – 12.0% [QT]
  - Center – 8.0% [QT]
  - Surround 5.1 – 8.0%
    - Localization – 35.35% [QL]
    - Std. Err. – 36.36% [QT]
    - SPL @ Max Volume – 18.18% [QT]
    - Weighted THD @ 80dB – 3.03% [QT]
    - Weighted THD @ Max Volume – 2.02% [QT]
    - 7.1 Rears – 5.05% [QL]
  - Height (Atmos) – 8.0%
    - Localization – 40.4% [QL]
    - Std. Err. – 36.36% [QT]
    - SPL @ Max Volume – 18.18% [QT]
    - Weighted THD @ 80dB – 3.03% [QT]
    - Weighted THD @ Max Volume – 2.02% [QT]
  - Audio Format Support: ARC/eARC – 5.0%
  - Stereo Total Harmonic Distortion – 2.0%
    - Weighted THD @ 80dB – 60% [QT]
    - Weighted THD @ Max Volume – 40% [QT]
  - Sound Enhancement Features – 2.0%
  - Audio Format Support: HDMI In – 2.0%
  - Audio Format Support: Optical – 2.0%
  - Latency – 2.0%
    - ARC – 40.0% [QT]
    - Optical – 32.5% [QT]
    - Full HDMI In – 27.5% [QT]

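The top-level percentages in the RTINGs breakdown (30% Dialogue/TV Shows, 25% Music, 45% Movies) read like the weights of an overall score. As a sketch under that assumption, the Python below combines the three usage scores into one weighted score. The function name `weighted_score` is hypothetical, and the per-product scores are the RTINGs figures quoted in the comparison table earlier in this article.

```python
# Assumption: the top-level percentages are weights for an overall (Mixed Usage) score.
USAGE_WEIGHTS = {"Dialogue/TV Shows": 0.30, "Music": 0.25, "Movies": 0.45}


def weighted_score(scores, weights=USAGE_WEIGHTS):
    """Weighted average of sub-scores; the weights must sum to 100%."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 100%")
    return sum(scores[name] * weight for name, weight in weights.items())


# RTINGs usage scores quoted in the product comparison table.
RTINGS_SCORES = {
    "Samsung HW-Q990C": {"Dialogue/TV Shows": 8.8, "Music": 8.5, "Movies": 8.4},
    "Bose Smart Soundbar 700": {"Dialogue/TV Shows": 7.7, "Music": 7.9, "Movies": 6.7},
    "Sennheiser Ambeo 3D MAX": {"Dialogue/TV Shows": 8.5, "Music": 7.6, "Movies": 7.8},
}

for product, scores in RTINGS_SCORES.items():
    print(product, round(weighted_score(scores), 1))
```

Rounded to one decimal, these results line up with the Mixed Usage scores quoted for the same three products (8.5, 7.3, and 8.0), which supports reading the percentages as weights. The same function would apply one level down, for example to combine a usage's sub-criteria with their own percentages.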

2. TechGear Lab

TechGear Lab is largely a qualitative tester, but they bolster their qualitative tests with comparative analysis, creating graphs that arrange products in a way that is easy for a consumer to understand. This makes them effective for comparing products, and a useful resource to consider after places like RTINGs that are very heavy on quantitative testing. They also do some quantitative testing, such as measuring volume levels, which strengthens their rating.

- Sound Quality
  - Cinematic [QL]
  - Music [QL]
- Ease Of Use
  - Setup [QL]
  - Connectivity [QL]
  - Sound Customization [QL]
- Volume [QT]
- Style/Design [QL]

3. SoundGuys

SoundGuys performs qualitative analysis of the areas one would expect to be assessed qualitatively: build quality, durability, and design are all looked at from a subjective standpoint. Their quantitative testing is what earns them a spot in our top 6 and a strong Trust Rating. They measure the frequency response of the speakers and use it to assess quality across the three basic parts of sound: bass, mids, and treble.

- Sound Quality
  - Bass [QT]
  - Midrange [QT]
  - Highs [QT]
- Durability/Build Quality [QL]
- Value [QL]
- Design [QL]

4. Wirecutter

Wirecutter engages in a blend of qualitative and quantitative testing, leaning into the qualitative. Through the use of “expert” listeners and control environments established with quantitative tests and equipment, they create a decent testing environment. However, they do not provide frequency response data, despite having the equipment to do so, claiming that such testing would be impossible to perform accurately because the data received is too erratic. This claim is somewhat questionable, since RTINGs manages to test it.

- Volume [QT]
- Dialogue [QL]
- Balance [QT]
  - Bass
  - Midrange
  - Treble
- Bass Response [QL]
- Fullness of Sound [QL]
- Preset Effectiveness [QL]
- Setup [QL]
- Ease Of Use [QL]

5. Kit Guru

While their measurement of maximum volume level is appreciated and an important aspect behind soundbars, Kit Guru doesn’t dig into sound quality using quantitative testing and instead simply approaches it from a subjective standpoint. While they allude to specific aspects of sound quality, such as bass and treble, they don’t dig into it with testing that returns hard data, which is a knock against them.

- Design [QL]
- Sound Quality
  - Bass [QL]
  - Treble [QL]
- Maximum Volume Level [QT]

6. Sound And Vision

Sound and Vision engages in quantitative testing that directly references different parts of the frequency response spectrum, which is useful for understanding how effectively a soundbar will hit different frequencies (sounds) when playing back music or audio from film. In addition to this, they also took measurements for sound levels, and while they didn’t measure the maximum output, they helpfully noted the point at which they stopped measuring for fear of hearing damage and included the dB level that this occurred at. What holds back S&V is a lower General Trust Rating as well as a lack of measurements for latency.

- Performance
  - Frequency Response [QT]
  - Sound Levels [QT]
- Features [QL]
- Ergonomics [QL]
- Value [QL]

Wrap-Up

Our comprehensive Soundbar Testing Methodology underscores our unwavering commitment to transparency and accuracy in evaluating soundbar performance. These Test Criteria fuel our Trust Ratings that we calculate for each reviewer during our ongoing research, which we analyze further in our Soundbar Trust List. The disturbing fact that 92% of soundbar reviewers lack substantive testing emphasizes the need for our meticulous approach.

The Trust Ratings are the bedrock of our Soundbar True Scores, which are the most accurate product scores on the web. Below are our soundbar reviews sorted by highest True Scores. We invite you to learn more about how we score products with True Scores and how they empower you to make informed buying decisions online.

We also invite expert reviewers to contribute to a more trustworthy and transparent review system. By adopting stringent testing standards and/or supporting our efforts, you help elevate the quality of soundbar reviews and ensure that consumers can make informed choices based on reliable information.

Let’s commit to higher standards together. Together, we can transform the landscape of soundbar reviews for the better.