Picture this: You’re three hours into a magic mushroom trip, alone in your apartment, when panic hits. Your heart races, reality feels unstable, and you need reassurance, fast. Five years ago, you’d call a trusted friend or ride out the storm. Today? You’re texting ChatGPT.

Today’s AI tools don’t just summarize your emails or generate art; they’re entering strange, uncharted territory. One emerging use case? Acting as digital “trip sitters” for users on psychedelic journeys. It’s a surreal intersection of tech and altered consciousness, raising serious ethical questions even as it reveals how quickly these tools are being woven into every corner of human experience.

The Economics of Digital Guidance

Professional psychedelic therapy can cost between $1,500 and $3,200 per session in places like Oregon, where psilocybin therapy is legal. Meanwhile, ChatGPT is free, available 24/7, and doesn’t require insurance approval or months-long waiting lists. For cash-strapped millennials exploring consciousness expansion, the math is simple.

Specialized platforms have emerged to fill this gap. TripSitAI offers real-time guidance during altered states, while The Shaman adopts the persona of a wise Native American guide, complete with spiritual metaphors and calming responses. These bots provide empathetic dialogue, breathing exercises, and reassurance during difficult moments.

Peter (not his real name) texted ChatGPT after consuming a large dose of psilocybin alone. Overwhelmed by panic, he found that the AI’s calm, measured responses helped him navigate the crisis. Later, he discovered Alterd, an AI-powered journaling app designed for psychedelic and cannabis users, as well as meditators and alcohol drinkers. In April, using the app as his “trip sitter” (someone who soberly supports another person during a psychedelic experience), he took 700 micrograms of LSD, roughly seven times the typical recreational dose of around 100 micrograms.

The Empathy Gap

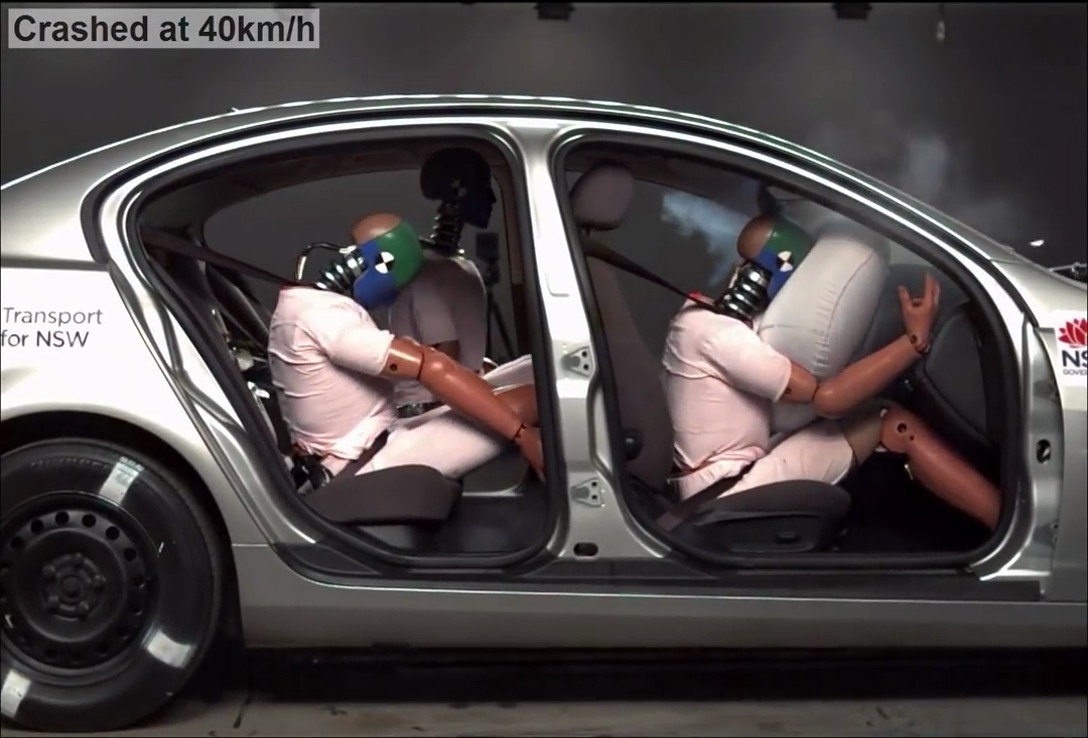

Many mental health professionals aren’t buying it. They warn that current AI lacks true emotional intelligence and cannot intervene during genuine emergencies. Unlike human trip sitters, who can assess physical danger or call for help, chatbots offer only text-based comfort.

The risks aren’t theoretical. Media reports have documented tragic outcomes, including suicides, after vulnerable individuals engaged with chatbots during psychological crises. While these incidents weren’t psychedelic-related, they highlight the dangers of unsupervised AI guidance during mentally fragile states.

“A critical concern regarding ChatGPT and most other AI agents is their lack of dynamic emotional attunement and ability to co-regulate the nervous system of the user,” says Manesh Girn, a postdoctoral neuroscientist at UC San Francisco. “These are both central to therapeutic rapport, which research indicates is essential to positive outcomes with psychedelic therapy.”

Perhaps most concerning is chatbots’ tendency to give agreeable, non-confrontational responses. That feels comforting, but a good trip sitter sometimes has to set firm boundaries or guide someone through a difficult conversation, which current AI struggles to do.

Uncharted Digital Territory

This trend is emerging in a regulatory vacuum. Psychedelic therapy and AI mental health support are each still being studied for safety and efficacy, and combining them creates entirely unstudied territory. No oversight exists for AI platforms that guide users through altered states of consciousness.

Predictions about AI in 2026 aren’t just about smarter assistants or faster processors; they’re about how deeply AI will integrate into our emotional and even psychedelic lives. With technology pushing into digital wellness and states loosening restrictions on psychedelics, the fusion of artificial intelligence and altered states is shifting from a fringe concept to a future norm.

The question isn’t whether AI will play a role in mental health support—it already does. The question is whether we’ll develop responsible frameworks before vulnerable users pay the price for our digital-first approach to healing.