Burning through cloud computing credits while debugging AI models gets expensive fast. Nvidia’s DGX Spark aims to solve that problem by cramming supercomputer-class AI hardware into a desktop box smaller than most gaming rigs. Starting October 15th, you can order this “personal AI supercomputer” for $3,999 and run massive language models from your desk instead of begging for datacenter access. For comparison, Apple’s Mac Studio with M3 Ultra starts at the same $3,999 and can be configured with up to 512 GB of unified memory. Its raw AI compute, however, likely trails hardware like Nvidia’s that is built around dedicated tensor cores.

Unified Memory Architecture Changes Everything

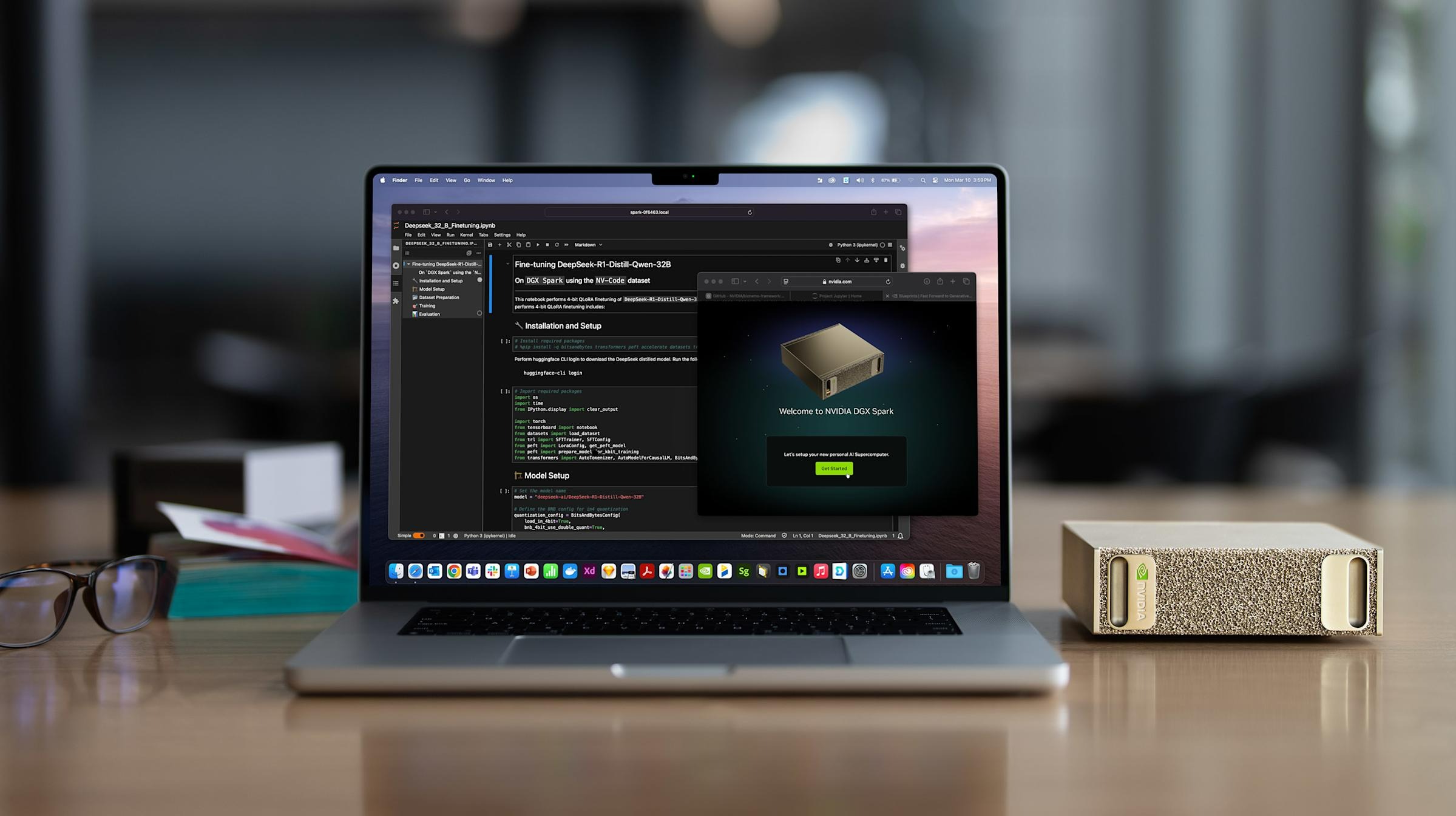

The DGX Spark packs Nvidia’s GB10 Grace Blackwell Superchip, a 20-core Arm CPU paired with a Blackwell GPU. Both share 128GB of LPDDR5x memory in a single address space. This unified architecture means no more shuffling data between system RAM and VRAM when working with large models.

You can load and run models of up to 200 billion parameters locally, a capability that previously required expensive cloud instances or multi-GPU server rigs. Nvidia rates the system at up to 1 petaflop of AI performance (FP4 precision, with sparsity). Reported batched throughput on models like Llama 3.1 8B reaches roughly 8,000 tokens per second. The compact chassis is 150mm square and about 50mm tall, weighs just 1.2kg, and runs from a 240W power supply. That is a lower power budget than most gaming desktops, yet it delivers genuine AI workstation capabilities.
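A quick way to sanity-check the 200-billion-parameter figure is to multiply parameter count by bytes per parameter. The sketch below is a rough estimate under stated assumptions, not Nvidia’s sizing method. It counts only model weights and ignores KV cache, activations, and OS overhead. The precision table and function names are illustrative. By this arithmetic, a 200B model fits in 128GB only at roughly 4-bit precision.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumptions (illustrative, not from Nvidia): weights dominate memory use;
# KV cache, activations, and OS overhead are ignored. 1 GB = 1e9 bytes.

BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "fp4": 0.5}
UNIFIED_MEMORY_GB = 128  # DGX Spark's shared LPDDR5x pool


def weight_footprint_gb(params_billion: float, precision: str) -> float:
    """Approximate size of the weights in GB for a given precision."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits(params_billion: float, precision: str,
         memory_gb: float = UNIFIED_MEMORY_GB) -> bool:
    """True if the weights alone fit in the given memory budget."""
    return weight_footprint_gb(params_billion, precision) <= memory_gb


if __name__ == "__main__":
    for precision in ("fp16", "fp8", "fp4"):
        size = weight_footprint_gb(200, precision)
        print(f"200B @ {precision}: {size:.0f} GB, fits in 128 GB: {fits(200, precision)}")
```

At FP16 the same model would need about 400 GB. Quantization is what makes the 200B claim workable on a single unit.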

Reality Check on Performance Expectations

The LPDDR5x memory tops out at 273 GB/s of bandwidth. That becomes the bottleneck for memory-bound work such as token-by-token text generation, where high-end discrete GPUs with GDDR7 or HBM offer several times more bandwidth. Think of DGX Spark as a well-equipped food truck versus a full restaurant kitchen: perfect for rapid iteration and specialized tasks, but not designed for maximum throughput.
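The bandwidth limit matters most during generation, when a model produces text one token at a time. This sketch uses a common back-of-envelope model, assumed here rather than taken from Nvidia, that treats each generated token as one full read of the weights. Under that model, the single-stream ceiling is bandwidth divided by weight size. Batching lets one read serve many sequences, which helps explain why batched throughput figures run far higher than what a single user sees. Function names and example model sizes are illustrative.

```python
# Back-of-envelope ceiling on decode speed for a memory-bandwidth-bound model.
# Assumption: each decode step streams every weight from memory once; KV-cache
# reads, compute limits, and caching effects are ignored, so real numbers are lower.

SPARK_BANDWIDTH_GBPS = 273.0  # DGX Spark LPDDR5x bandwidth, GB/s


def max_decode_tokens_per_s(params_billion: float, bytes_per_param: float,
                            bandwidth_gbps: float = SPARK_BANDWIDTH_GBPS) -> float:
    """Single-stream ceiling: bandwidth divided by bytes read per token."""
    weights_gb = params_billion * bytes_per_param
    return bandwidth_gbps / weights_gb


def batched_ceiling(params_billion: float, bytes_per_param: float, batch_size: int,
                    bandwidth_gbps: float = SPARK_BANDWIDTH_GBPS) -> float:
    """One pass over the weights serves every sequence in the batch, so this
    idealized ceiling scales linearly with batch size; in practice compute
    becomes the limit first, which this simple model does not capture."""
    return batch_size * max_decode_tokens_per_s(params_billion, bytes_per_param,
                                                bandwidth_gbps)


if __name__ == "__main__":
    print(f"8B @ 8-bit, 1 stream:  {max_decode_tokens_per_s(8, 1.0):.1f} tok/s")
    print(f"70B @ 4-bit, 1 stream: {max_decode_tokens_per_s(70, 0.5):.1f} tok/s")
    print(f"8B @ 8-bit, batch 32:  {batched_ceiling(8, 1.0, 32):.0f} tok/s")
```

For an 8B model at 8-bit precision this gives a single-stream ceiling of about 34 tokens per second. Real numbers land lower once KV-cache reads and other overheads are included.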

DGX Spark shines in local development scenarios that value large memory capacity over peak performance. With 128GB available, it can handle models too large for a typical consumer GPU’s VRAM. That makes fine-tuning, private inference workloads, and on-premises processing for compliance practical on a single desktop machine. Educational institutions and startups needing flexible AI R&D capabilities without ongoing cloud costs will find genuine value here.

Desktop Convenience Meets Enterprise Capability

Acer, Asus, Dell, HP, Lenovo, and MSI will offer their own DGX Spark variants at similar $3,999 price points. Each system ships with DGX OS and Nvidia’s complete AI software stack, including seamless workflow portability to DGX Cloud for scaling up projects. The ConnectX-7 networking enables clustering multiple units for distributed workloads—connecting two DGX Spark systems supports models up to 405 billion parameters.
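The 405-billion-parameter figure for two linked units follows from the same weight arithmetic. This sketch assumes 4-bit weights, an even split across units, and no headroom for KV cache or runtime overhead. `units_needed` is a hypothetical helper, not part of Nvidia’s software stack.

```python
# How many linked DGX Spark units would a model's weights need?
# Assumptions (illustrative): 128 GB per unit, weights split evenly across
# units, 4-bit weights by default, no headroom for KV cache or overhead.
import math

MEMORY_PER_UNIT_GB = 128
BYTES_PER_PARAM_FP4 = 0.5


def units_needed(params_billion: float,
                 bytes_per_param: float = BYTES_PER_PARAM_FP4,
                 memory_per_unit_gb: float = MEMORY_PER_UNIT_GB) -> int:
    """Hypothetical helper: smallest unit count whose pooled memory holds the weights."""
    weights_gb = params_billion * bytes_per_param
    return math.ceil(weights_gb / memory_per_unit_gb)


if __name__ == "__main__":
    for params in (200, 405):
        print(f"{params}B @ 4-bit: {params * BYTES_PER_PARAM_FP4:.1f} GB -> "
              f"{units_needed(params)} unit(s)")
```

At 4 bits, 405B parameters need about 202.5 GB. That is more than one unit’s 128GB but within the 256GB two units provide.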

For AI researchers tired of rationing cloud resources or data scientists needing consistent local development environments, DGX Spark represents a democratization of serious AI hardware. At $3,999, it costs less than a year of heavy cloud usage while providing unmetered local experimentation. Just don’t expect it to replace dedicated training clusters. It brings AI development back to your desk, not your datacenter.
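Whether $3,999 really beats a year of cloud usage depends on the hourly rate and how heavily you use it. The break-even sketch below uses a hypothetical $2.00 per GPU-hour rate purely for illustration. That rate is not a quoted price from any provider. The sketch also ignores electricity, depreciation, and resale value.

```python
# Break-even sketch: DGX Spark purchase price vs. renting cloud GPU time.
# HYPOTHETICAL_RATE_USD is an illustrative placeholder, not a quoted price.
# Electricity, depreciation, and resale value are ignored.

SPARK_PRICE_USD = 3_999.0
HYPOTHETICAL_RATE_USD = 2.00  # assumed cost per cloud GPU-hour


def break_even_hours(hourly_rate_usd: float,
                     price_usd: float = SPARK_PRICE_USD) -> float:
    """Rented hours whose cost equals the purchase price."""
    return price_usd / hourly_rate_usd


def months_to_break_even(hourly_rate_usd: float, hours_per_day: float,
                         price_usd: float = SPARK_PRICE_USD,
                         days_per_month: int = 30) -> float:
    """Months of steady usage before renting would have cost more than buying."""
    monthly_cost = hourly_rate_usd * hours_per_day * days_per_month
    return price_usd / monthly_cost


if __name__ == "__main__":
    hours = break_even_hours(HYPOTHETICAL_RATE_USD)
    months = months_to_break_even(HYPOTHETICAL_RATE_USD, hours_per_day=8)
    print(f"Break-even: {hours:.0f} GPU-hours, about {months:.1f} months at 8 h/day")
```

At that assumed rate and eight hours a day, the purchase price equals roughly 2,000 rented hours, or a little over eight months of use. A cheaper rate or lighter usage pushes break-even further out.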