In Microsoft’s laboratory tests, running coolant through microscopic channels etched into the silicon itself cut the maximum temperature rise inside a GPU by 65%. This is more than an incremental improvement: it removes the layers that separate coolant from the hottest parts of a data center’s most power-hungry chips.

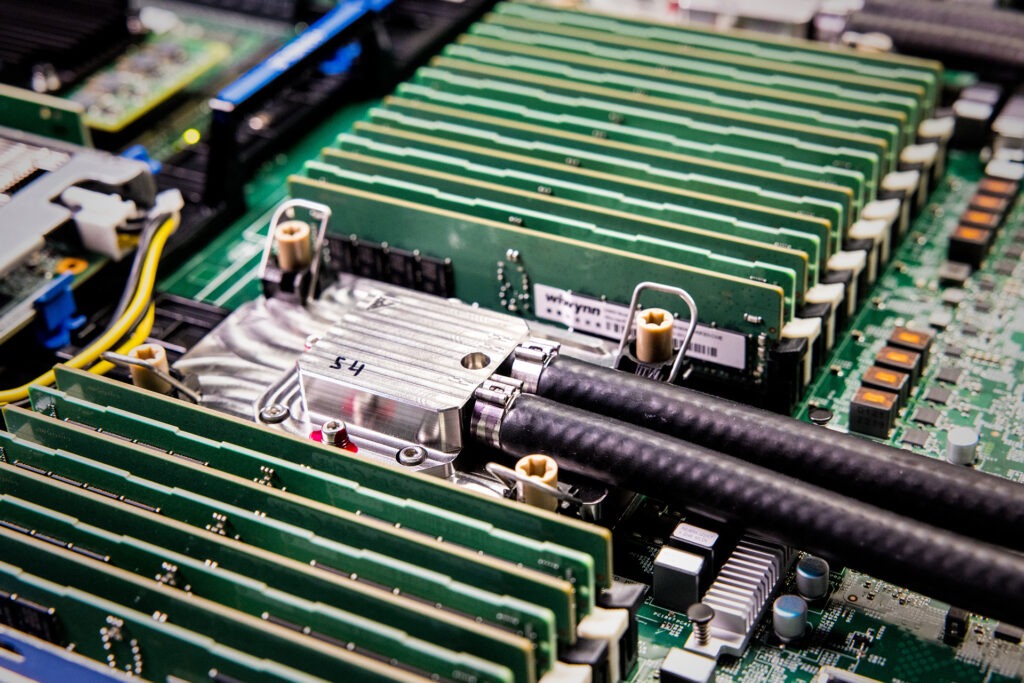

Current cold plate systems keep liquid outside the silicon, so heat must cross several layers of material before it reaches the coolant, and each layer limits how much heat can be removed. Microsoft’s microfluidic approach carves channels as narrow as a human hair directly into the chip substrate and uses AI-driven heat mapping to route coolant precisely where temperatures spike.

These microscopic highways target hot spots as they shift across the die, instead of treating every part of the chip the same. Working with Swiss startup Corintis, Microsoft borrowed the layout from nature: branching patterns like the veins of a leaf, which evolved over millions of years to distribute resources efficiently.

This bio-inspired layout removes heat up to three times more effectively than cold plates, according to Microsoft, enabling computational densities that would otherwise overheat the hardware. The result? Data centers can pack more processing power into smaller footprints without thermal throttling.

Racing to support increasingly power-hungry AI workloads, data center operators face a brutal reality: traditional cooling hits physical limits just as demand explodes. Microsoft’s innovation allows warmer coolant temperatures, reducing the energy spent chilling fluids while supporting 3D-stacked chip architectures that current methods can’t handle. This aligns with the company’s $30 billion infrastructure investment in custom silicon and sustainable data center designs.
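To see why a smaller temperature rise permits warmer coolant, consider a rough back-of-the-envelope sketch. It treats peak chip temperature as coolant temperature plus temperature rise, which is a simplification, and applies the 65% reduction Microsoft reported. The 90 °C chip limit and the 40 °C baseline rise are hypothetical numbers chosen only for illustration; they are not Microsoft figures.

```python
# Illustrative arithmetic only. LIMIT_C and BASELINE_RISE_C are hypothetical
# inputs, not measurements from Microsoft's tests.

def max_coolant_temp(limit_c: float, rise_c: float) -> float:
    """Warmest coolant that keeps the chip at or below limit_c.

    Simplified model: peak chip temperature = coolant temperature + rise.
    """
    return limit_c - rise_c


LIMIT_C = 90.0          # hypothetical maximum allowed chip temperature
BASELINE_RISE_C = 40.0  # hypothetical temperature rise with a cold plate
REDUCTION = 0.65        # reduction in maximum temperature rise cited above

# Temperature rise remaining after the reported 65% reduction.
micro_rise_c = BASELINE_RISE_C * (1 - REDUCTION)

cold_plate_coolant_c = max_coolant_temp(LIMIT_C, BASELINE_RISE_C)
micro_coolant_c = max_coolant_temp(LIMIT_C, micro_rise_c)

print(f"Temperature rise with microfluidics: {micro_rise_c:.1f} C")
print(f"Warmest usable coolant, cold plate:  {cold_plate_coolant_c:.1f} C")
print(f"Warmest usable coolant, in-chip:     {micro_coolant_c:.1f} C")
```

Under these assumed inputs, the in-chip case tolerates coolant roughly 26 °C warmer than the cold plate case. Real headroom depends on the chip, the workload, and the coolant flow rate.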

Manufacturing these cooling veins poses serious engineering challenges. Etching channels without compromising structural integrity or creating leaks requires precision that makes smartphone fabrication look simple. Microsoft validated prototypes on existing Intel silicon, but scaling from laboratory success to production-ready chips remains the critical hurdle.

The implications stretch beyond better cooling. If it scales to production, this approach could remove thermal constraints that currently limit chip designers, potentially unlocking processor architectures that today’s cooling systems can’t support. Future AI applications may depend on innovations happening at the microscopic level of silicon itself.