History’s biggest disasters often trace back to a simple truth: someone made a call that seemed reasonable at the time. These aren’t stories about natural catastrophes or acts of war—they’re about ordinary people making extraordinary mistakes that rippled across continents. From flawed safety tests to ignored warnings, these human errors changed everything.

18. Grenfell Tower Fire

A faulty refrigerator sparked the blaze on June 14, 2017, but flammable cladding turned a West London high-rise into a death trap. Seventy-two people died when flames raced up the building’s exterior, fed by materials that never should have passed safety checks. Residents were told to “stay put” by emergency services following standard protocol for fireproof buildings—except Grenfell Tower wasn’t actually fireproof. Warnings about the cladding had been issued and ignored. The building’s cavity barriers were inadequate. Every safety measure that could fail did, and the people inside paid for decisions made in boardrooms they’d never seen.

17. Chernobyl

Reactor No. 4 wasn’t supposed to explode. Operators at Chernobyl on April 26, 1986, were running a routine safety test when they disabled key protection systems and removed too many control rods. The reactor entered an unstable state, then a rapid power surge triggered a steam explosion and graphite fire that released radioactive material across Europe. Authorities waited 36 hours before telling nearby residents to evacuate, leading to widespread exposure and contamination. Local agriculture was banned for decades. The surrounding area remains uninhabitable, a monument to what happens when protocol gets tossed aside for convenience.

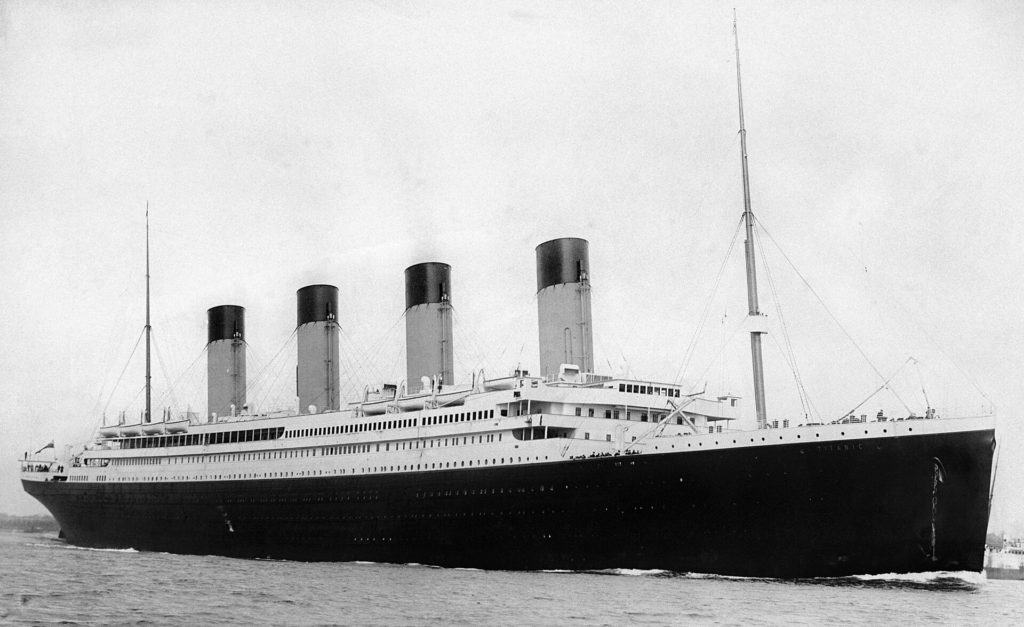

16. The Titanic

Captain Edward Smith kept the Titanic at near-maximum speed despite repeated ice warnings, confident in a ship widely described as practically unsinkable. That confidence proved expensive. When lookouts finally spotted the iceberg, First Officer William Murdoch ordered “hard-a-starboard” and, by some accounts, “full astern,” a combination that would have reduced the ship’s turning ability. The 1912 disaster wasn’t just about hitting ice. It was about arrogance meeting reality at 22 knots. Lifeboats that could have saved more lives were launched half-empty, in part because many aboard didn’t believe the ship was really sinking. Over 1,500 people died because someone thought the rules didn’t apply.

15. The Exxon Valdez Oil Spill

Captain Joseph Hazelwood left the bridge in 1989, handing control to a third mate who wasn’t certified to pilot the tanker through those waters and who ran the Exxon Valdez aground on Bligh Reef. Eleven million gallons of crude oil spilled into Prince William Sound, coating 1,300 miles of Alaska coastline. Hazelwood had been drinking. The crew was fatigued. Radar equipment that could have prevented the grounding reportedly wasn’t functioning, likely because someone decided maintenance could wait. The spill devastated local fishing communities and wildlife populations that still haven’t fully recovered. Sometimes saving a few dollars on equipment checks costs billions in cleanup and reputation.

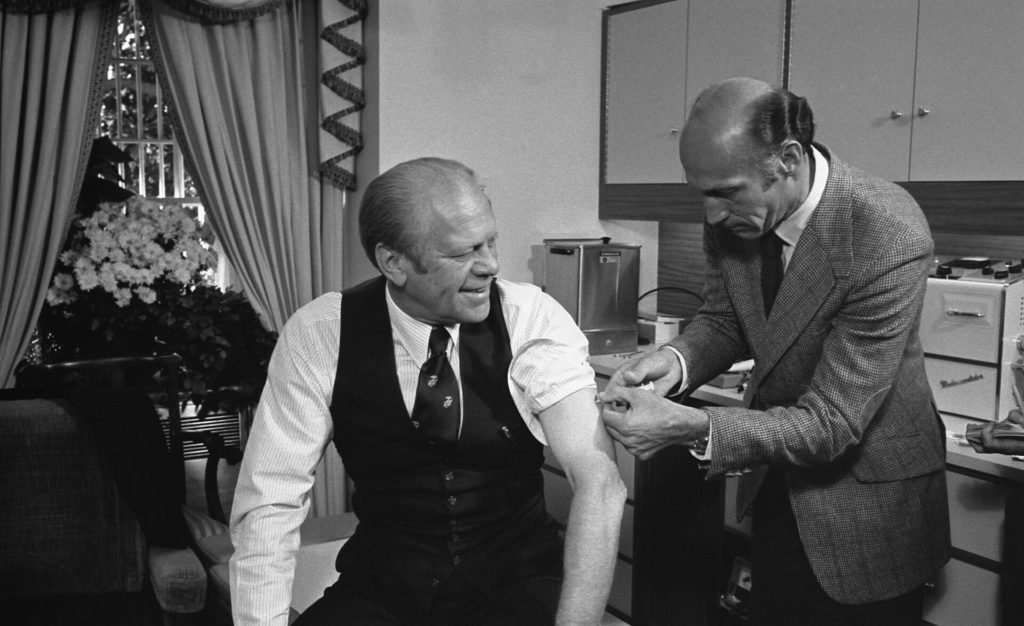

14. 1976 Swine Flu Vaccination Campaign

Health officials panicked after a single swine flu outbreak at Fort Dix and rushed a vaccine to market, fearing a pandemic like 1918. What they got instead was a public health disaster that eroded trust for years. The 1976 vaccination campaign was halted when more people developed Guillain-Barré syndrome from the vaccine than died from the flu itself. Turns out the pandemic they feared never materialized, but the damage to vaccination programs did. Hundreds suffered paralysis from a shot meant to protect them. Good intentions paired with bad judgment created a textbook case of overreaction with lasting consequences.

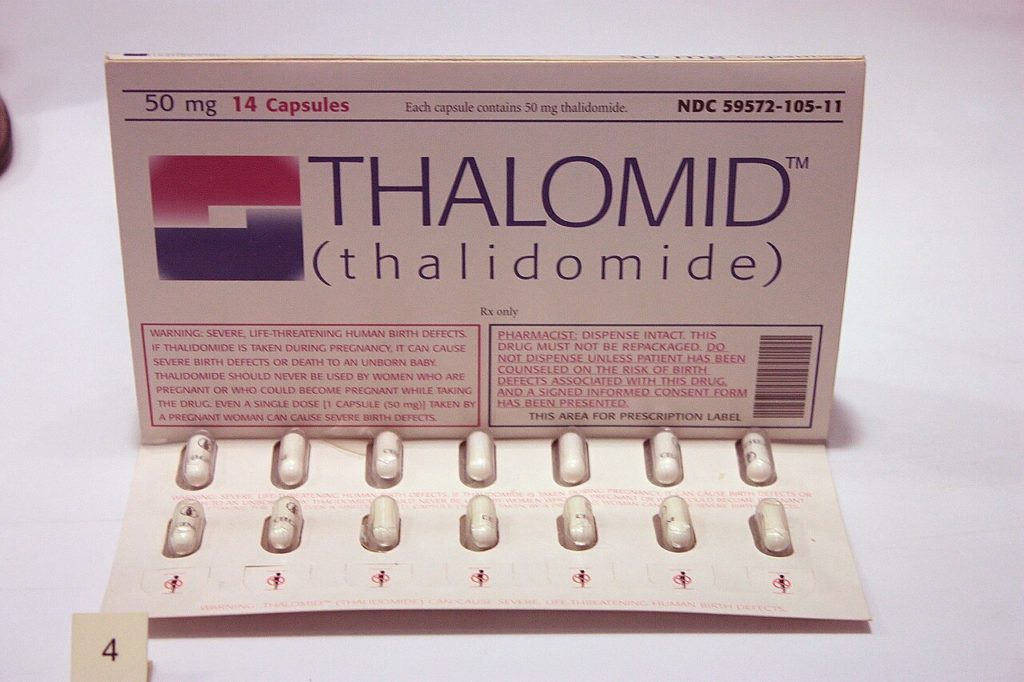

13. Thalidomide

Marketing a sedative as safe for pregnant women without adequate testing seemed like good business in the late 1950s, until over 10,000 children worldwide were born with severe limb deformities. Thalidomide became the cautionary tale that fundamentally changed drug approval processes. Pharmaceutical companies had assumed that substances safe for adults would be safe for developing fetuses, a guess that proved catastrophically wrong. By the time the drug was withdrawn in the early 1960s, an entire generation had paid the price for shortcuts in testing protocols. Stricter drug-testing laws, including the 1962 Kefauver-Harris Amendment in the United States, exist because someone once thought rigorous clinical trials were optional.

12. The Housing Market Crash of ’08

Banks packaged risky subprime mortgages into investment products, then convinced rating agencies to call them safe. Moody’s and Standard & Poor’s slapped AAA ratings on securities that were essentially junk bonds dressed in fancy labels. When housing prices fell, the entire system collapsed like a house of cards built on a trampoline. Government bailouts couldn’t prevent the global recession that followed. Millions lost homes, jobs, and retirement savings because financial institutions prioritized short-term profits over basic risk assessment. The 2008 crisis proved that when everyone’s incentivized to lie about danger, disaster becomes inevitable. Almost no senior executives went to jail, but everyone paid the bill.

11. Long-Term Capital Management Collapse

Nobel Prize-winning economists helped run a hedge fund whose complex mathematical models assumed certain market conditions would never happen. Spoiler: in 1998, they happened. When Russia defaulted on its bonds, Long-Term Capital Management’s sophisticated models failed spectacularly, requiring a $3.6 billion rescue that was funded by a consortium of major banks and organized by the Federal Reserve Bank of New York to head off wider financial damage. Turns out even the smartest people in the room can build a house on bad assumptions. The fund had leveraged itself so aggressively that its failure threatened every major bank. Intelligence doesn’t immunize against hubris, and complicated models can’t predict the unpredictable.

10. The Challenger Disaster

Engineers at Morton Thiokol knew the O-rings in the solid rocket boosters could fail in cold weather. They said so repeatedly. NASA management pressed for launch anyway on January 28, 1986, despite temperatures well below safe parameters. Political pressure and schedule concerns overruled engineering data, and 73 seconds after liftoff, Challenger exploded, killing all seven crew members. The decision wasn’t made in ignorance—it was made despite knowledge. Launch windows and optics mattered more than the people sitting on top of the rocket. Sometimes the worst disasters happen because someone in charge decides the risks don’t apply to them.

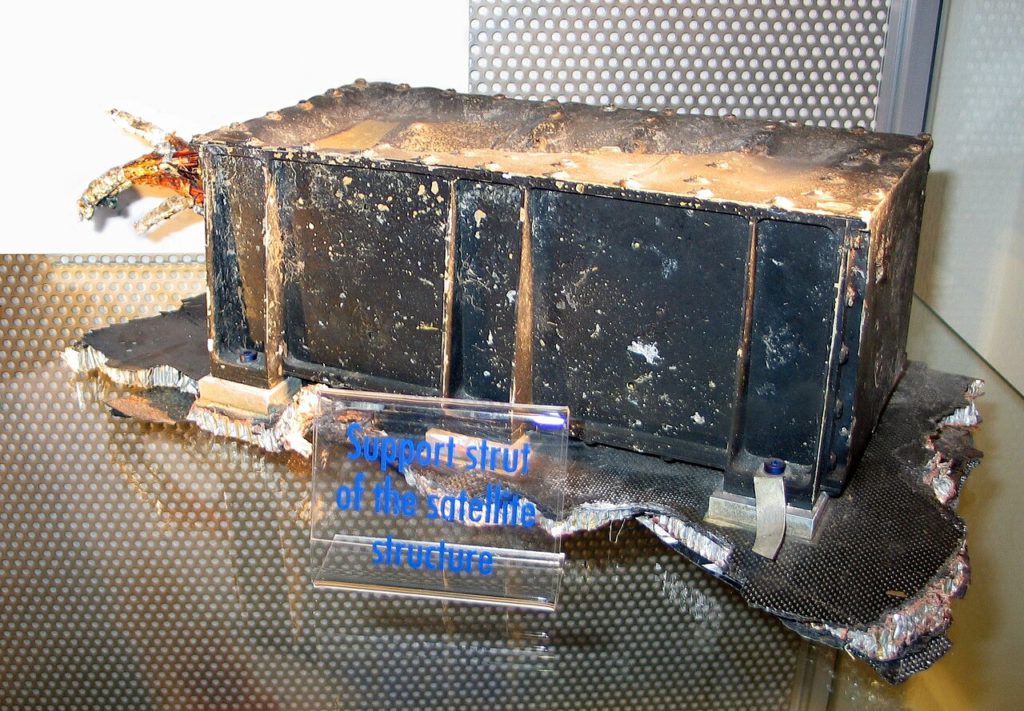

9. The Columbia Disaster

NASA dismissed concerns about foam debris that struck Columbia during launch in 2003, believing it couldn’t cause significant damage. Engineers requested satellite imagery to assess potential harm, but management refused. During re-entry, superheated gas entered through the damaged wing, destroying the orbiter and killing all seven crew members. The agency had learned nothing from Challenger. Seventeen years later, they made the same fundamental mistake: treating engineering concerns as negotiable. The foam strike happened during launch. The disaster happened because someone decided not to look at the evidence.

8. The Bhopal Gas Tragedy

Water entering a storage tank at a Union Carbide pesticide plant triggered a chemical reaction that released toxic methyl isocyanate gas over Bhopal, India, on the night of December 2–3, 1984. Safety systems had been shut down to save money. Warning alarms failed to alert the surrounding community. Over 3,800 people died in the immediate aftermath, with hundreds of thousands suffering long-term health effects that persist today. The company had cut corners on maintenance, staffing, and emergency protocols. Residents sleeping near the plant woke to a cloud of poison because executives in another country decided safety equipment was too expensive to maintain.

7. Deepwater Horizon

BP’s cost-cutting decisions lined up like dominoes in 2010, each one bringing the Deepwater Horizon oil rig closer to disaster. The company used cheaper casing designs, misread the results of a negative pressure test, and dismissed warning signs that the well was unstable. When a blowout occurred, safety systems that should have prevented catastrophe failed across the board. Eleven workers died in the explosion. Nearly 5 million barrels of oil spewed into the Gulf of Mexico over 87 days. The environmental and economic damage ran into the tens of billions of dollars, all traceable to a series of choices where saving money trumped protecting lives and ecosystems.

6. Ariane 5 Rocket Explosion

Software designed for the Ariane 4 rocket was reused in the Ariane 5 without proper testing, a decision that held up for about 37 seconds of flight before the rocket was destroyed in 1996. An unhandled software exception shut down the guidance system, sending the rocket off course and destroying it along with four scientific satellites, a loss of roughly $370 million. The code couldn’t handle the Ariane 5’s higher horizontal velocity: converting that value from a 64-bit floating-point number to a 16-bit integer overflowed, and the error brought down the entire system. Engineers had assumed old code would work in the new rocket because it seemed close enough. In aerospace, “close enough” gets expensive fast.

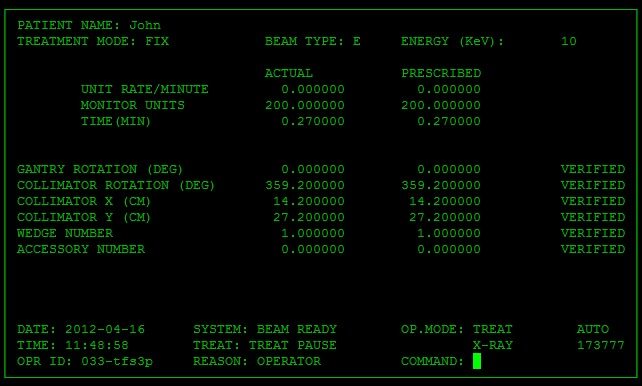

5. Therac-25 Radiation Therapy

Six patients received massive radiation overdoses between 1985 and 1987, and several of them died, because of software bugs in a radiation therapy machine. The Therac-25’s interface allowed technicians to accidentally trigger lethal radiation doses while displaying error messages that looked routine enough to ignore. Poor software design combined with inadequate safety interlocks created a machine that could kill the people it was built to heal. Developers had removed hardware safety features present in earlier models, trusting software alone to prevent disasters. That trust proved fatal. Medical device regulations got a lot stricter after Therac-25 demonstrated what happens when code becomes the only thing standing between patients and death.

4. Korean Air Flight 007

Navigation errors sent Korean Air Lines Flight 007 deep into Soviet airspace on September 1, 1983, over some of the most sensitive military installations in the USSR. Investigators later concluded that the Boeing 747’s autopilot had been left in a constant heading mode instead of following the programmed route, and nobody noticed the deviation for hours. A Soviet interceptor shot down the civilian airliner, killing all 269 people aboard, after air defense forces failed to distinguish a lost passenger jet from a spy plane. The tragedy was entirely preventable: basic navigation checks would have caught the error. Instead, innocent people died because pilots trusted their instruments without verifying the settings.

3. Tenerife Airport Disaster

Radio miscommunication killed 583 people on March 27, 1977, when two Boeing 747s collided on a fog-shrouded runway in aviation’s deadliest accident. KLM Flight 4805 began takeoff while Pan Am Flight 1736 was still on the runway. Poor radio procedures, non-standard phraseology, and frequency congestion meant neither crew understood what the other was doing. The KLM captain was experienced and respected, which made him confident enough to start his takeoff roll without explicit clearance. Hierarchy and poor communication protocols turned a misunderstanding into mass casualty. Clear language could have prevented everything.

2. FirstEnergy 2003 Blackout

Inadequate tree trimming near power lines in Ohio triggered a cascade failure that left 50 million people without electricity on August 14, 2003. A software bug had silenced FirstEnergy’s control-room alarms, so operators missed the early line failures and failed to notify regional operators when their system became unstable, allowing a local problem to spread across much of the Northeast and Midwest and into Ontario. Deregulation and cost-cutting had weakened grid reliability, and utilities prioritized profits over maintenance. Trees touching high-voltage lines should never cause a regional blackout, but when every safety margin gets shaved away to boost quarterly earnings, small problems become catastrophic. The blackout demonstrated what happens when infrastructure maintenance becomes optional.

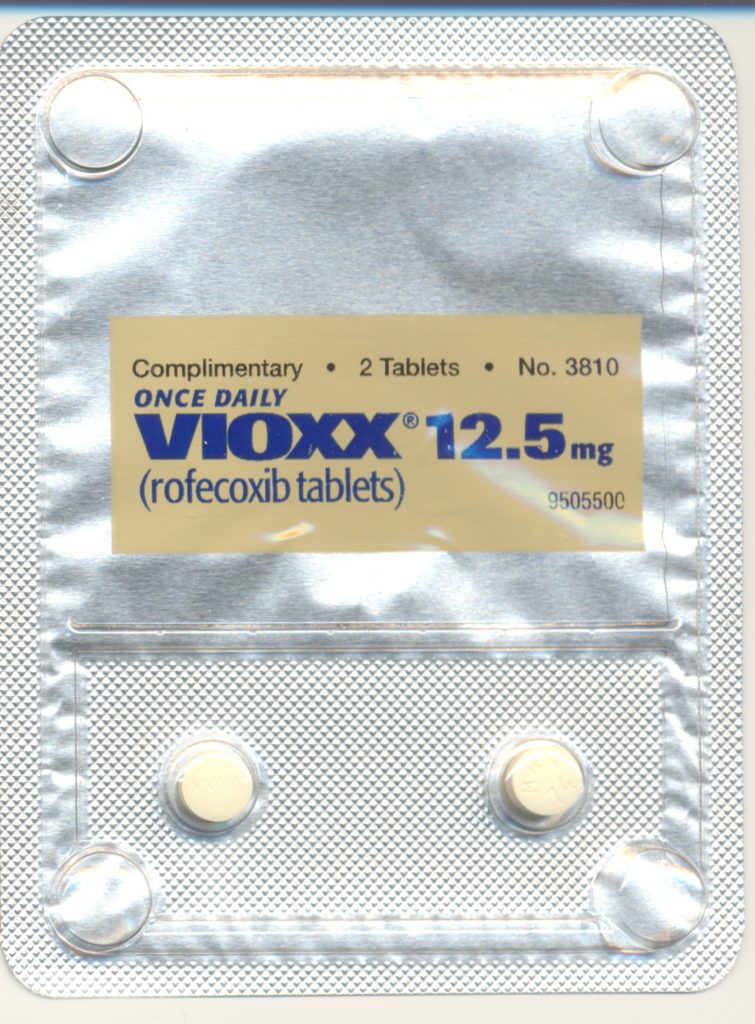

1. Vioxx

Merck continued marketing Vioxx for arthritis pain even after internal studies showed increased heart attack risk, leading to an estimated 88,000 to 140,000 excess cases of serious heart disease before the drug’s 2004 withdrawal. The FDA had approved Vioxx despite cardiovascular concerns, and post-market surveillance failed to catch the danger until thousands had already suffered. Pharmaceutical companies and regulators both dropped the ball, prioritizing drug approval speed over patient safety. The scandal exposed weaknesses in how medications are monitored after hitting the market. Turns out the approval process is just the beginning, and watching what happens afterward matters just as much.