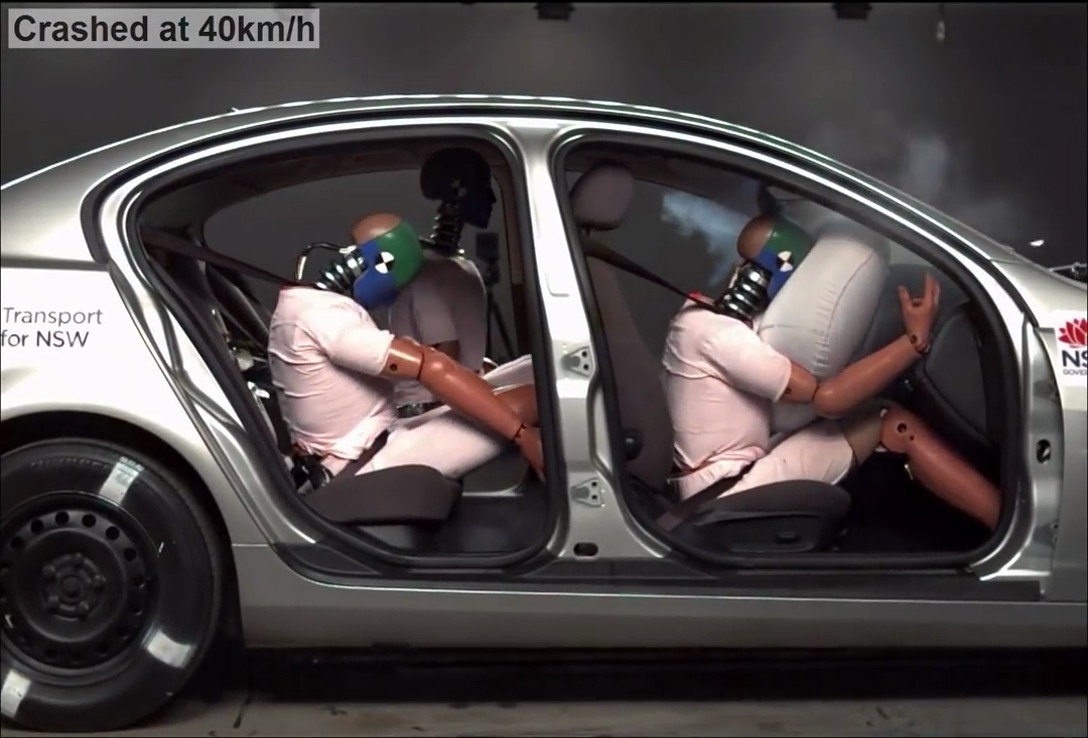

Google DeepMind has achieved a significant milestone in robotics by developing a table tennis robot that can play at a “solidly amateur human level” against human opponents. This breakthrough, reported in a newly published paper titled “Achieving Human-Level Competitive Robot Table Tennis,” marks a new benchmark in robot learning and control.

The robot won 13 of its 29 matches against human players, a 45% win rate across skill levels. It won every match against beginners and 55% of its matches against intermediate players, but it lost every match against advanced players.

“This is the first robot agent capable of playing a sport with humans at a human level and represents a milestone in robot learning and control,” the paper states. “However, it is also only a small step towards a long-standing goal in robotics of achieving human-level performance on many useful real-world skills. A lot of work remains in order to consistently achieve human-level performance on single tasks, and then beyond, in building generalist robots that are capable of performing many useful tasks, skillfully and safely interacting with humans in the real world.”

Table tennis has been a key testbed for robots since the 1980s, requiring speed, responsiveness, and strategic play. Google DeepMind’s achievement builds upon the legacy of robots in sports, such as the annual RoboCup soccer competition, which dates back to the mid-1990s.

As TechCrunch reports, the current system still has limitations despite its success, particularly in latency and reaction time when dealing with fast balls. The researchers propose addressing these issues through advanced control algorithms, hardware optimizations, predictive models, and faster communication protocols between sensors and actuators.

The robot’s learning and adaptation rely on both simulation and real-world data. As Popular Science points out, engineers compiled a large dataset of initial table tennis ball states, including position, spin, and speed, for the AI system to practice on in physically accurate virtual simulations. Data gathered during play was then fed back into the simulations, creating a continuous feedback loop of learning.

The techniques behind the system, including its policy architecture, its use of simulation to prepare for real games, and its real-time strategy adaptation, have potential applications beyond table tennis. These advancements could influence other areas of robotics and human-robot interaction, such as the development of generalist robots that perform many useful tasks and interact safely with humans in the real world.

As research continues, Google DeepMind aims to address the robot’s limitations, improve its reaction time, and boost its performance against advanced players. The team is also exploring applications beyond table tennis in robotics and human-robot interaction, striving towards human-level performance on many useful real-world skills.

Image credit: Google DeepMind