Dead phone batteries kill productivity, but losing your AI assistant when the WiFi drops? That’s a special kind of modern hell, like trying to explain TikTok trends to your parents without Google Translate.

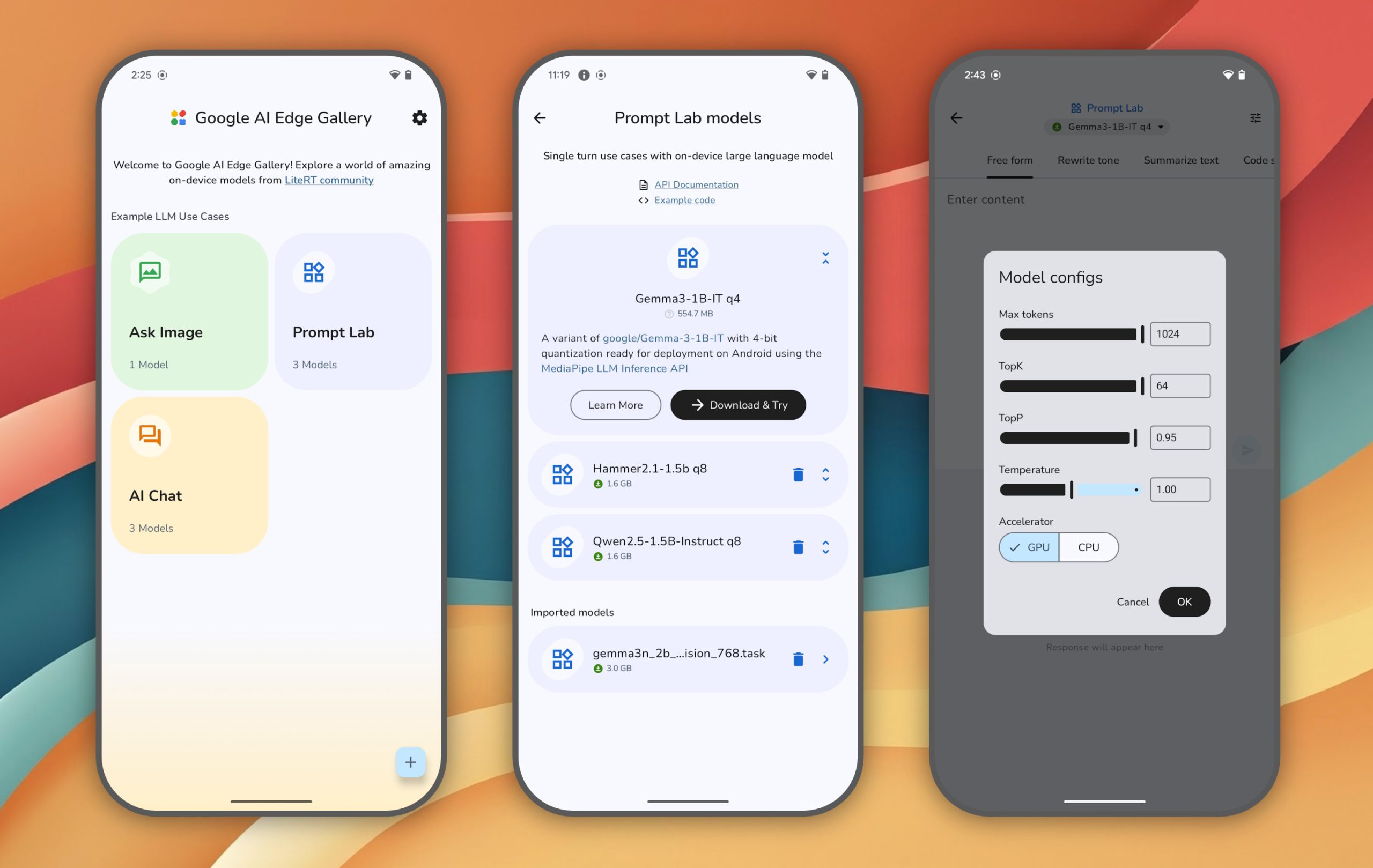

Google just quietly launched a new contender in the AI race: an experimental app called AI Edge Gallery that tackles real-world tasks without relying on the cloud. While most headlines fixate on ChatGPT’s online capabilities, this tool runs directly on your device, highlighting how some of the most underrated AI tools are redefining performance by working offline and flying under the radar.

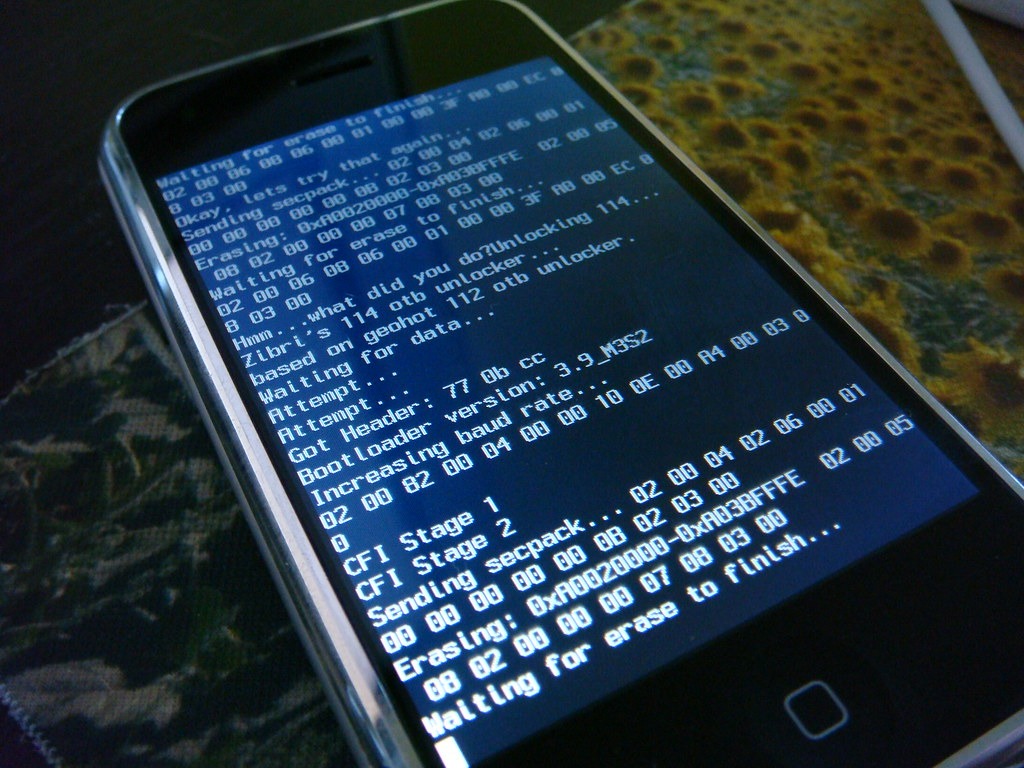

Unlike Apple Intelligence, which is locked to specific hardware, or Microsoft’s cloud-dependent Edge AI, Google’s solution runs on most modern Android devices. The app lets you download AI models from Hugging Face and run them completely offline.

You can now generate images, chat with AI, write code, and even analyze photos—entirely offline. This shift reflects how Google Gemini’s photo-editing breakthrough is turning your device into a multilingual creative studio, no longer tethered to the cloud.

Your data paranoia finally makes sense. Since everything runs locally, your conversations, images, and prompts never have to leave your phone, so there is nothing for a remote server to intercept, store, or analyze. Processing happens directly on your device, which also removes the round trip to a server. For more on how this works, check out our local AI explainer.

The catch? Performance depends heavily on your phone’s hardware. Your battery will drain faster during intensive AI tasks, and older devices might struggle with larger models. Current limitations include model size constraints and no voice interaction—yet.
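To get a feel for why larger models strain older phones, here is a back-of-the-envelope sketch in Python. It assumes the model weights dominate memory use and that their size is roughly parameter count times bytes per parameter. The precision table, the 20% runtime overhead, and the 4 GB of free RAM are illustrative assumptions, not figures from Google or from the app, and the function names are hypothetical.

```python
# Rough estimate only: weight size ~= parameter count x bytes per parameter.
# Every number here is an illustrative assumption, not a measurement.

# Approximate storage cost per parameter at common precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_gb(params_billions: float, precision: str) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    # billions of parameters x bytes per parameter = billions of bytes = GB
    return params_billions * BYTES_PER_PARAM[precision]


def fits_in_ram(params_billions: float, precision: str,
                free_ram_gb: float, overhead: float = 1.2) -> bool:
    """True if weights plus an assumed 20% runtime overhead fit in free RAM."""
    return weights_gb(params_billions, precision) * overhead <= free_ram_gb


if __name__ == "__main__":
    # Hypothetical phone with 4 GB of RAM free for the model.
    for size in (1, 4, 8):
        for precision in BYTES_PER_PARAM:
            gb = weights_gb(size, precision)
            ok = fits_in_ram(size, precision, free_ram_gb=4.0)
            print(f"{size}B params @ {precision}: ~{gb:.1f} GB, fits: {ok}")
```

Under these assumptions, a 4-billion-parameter model squeezed to 4 bits per weight needs about 2 GB, while the same model at 16 bits needs about 8 GB. That gap is the kind of thing that separates "runs fine" from "struggles" on an older device.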

Google’s Gemma 3n model is one of the supported options, offering advanced capabilities for coding and chat. And if you care about keeping all your data on your device, read our deep dive on mobile data privacy.

Google calls this an “experimental Alpha release” and wants developer feedback. The app uses Apache 2.0 licensing, meaning it’s open source and free for commercial use. It’s currently available on Android as a download from GitHub, with iOS support coming soon.

This shift from cloud AI toward local AI processing represents something bigger than just another app. Your smartphone is becoming less dependent on constant connectivity and more capable of independent intelligence. That’s either the future we’ve been waiting for, or the beginning of our phones getting too smart for their own good.