You’ve probably felt that annoying twinge when someone with authority holds you to rules they don’t follow themselves. That’s exactly what happened to Ella Stapleton, a business major at Northeastern University, who uncovered her professor’s digital hypocrisy in spectacular fashion. The New York Times reports that she filed a formal complaint after discovering the professor had used ChatGPT to generate lecture notes and presentation slides, despite banning students from using the tool.

Stapleton wasn’t initially hunting for inconsistencies. She simply noticed something odd in her organizational behavior class notes – an instruction to “expand on all areas” and “be more detailed and specific.” If that sounds suspiciously like someone talking to ChatGPT, that’s because it was.

The Digital Paper Trail

Further digging revealed a treasure trove of AI fingerprints that would make any ChatGPT user blush. The professor’s materials featured the classic AI giveaways: citations listing “ChatGPT” as a source, those extra fingers that AI can’t seem to stop adding to human hands in generated images, and fonts that mysteriously changed mid-document. The discovery is fueling fresh debate around AI replacing educators in ways we never imagined.

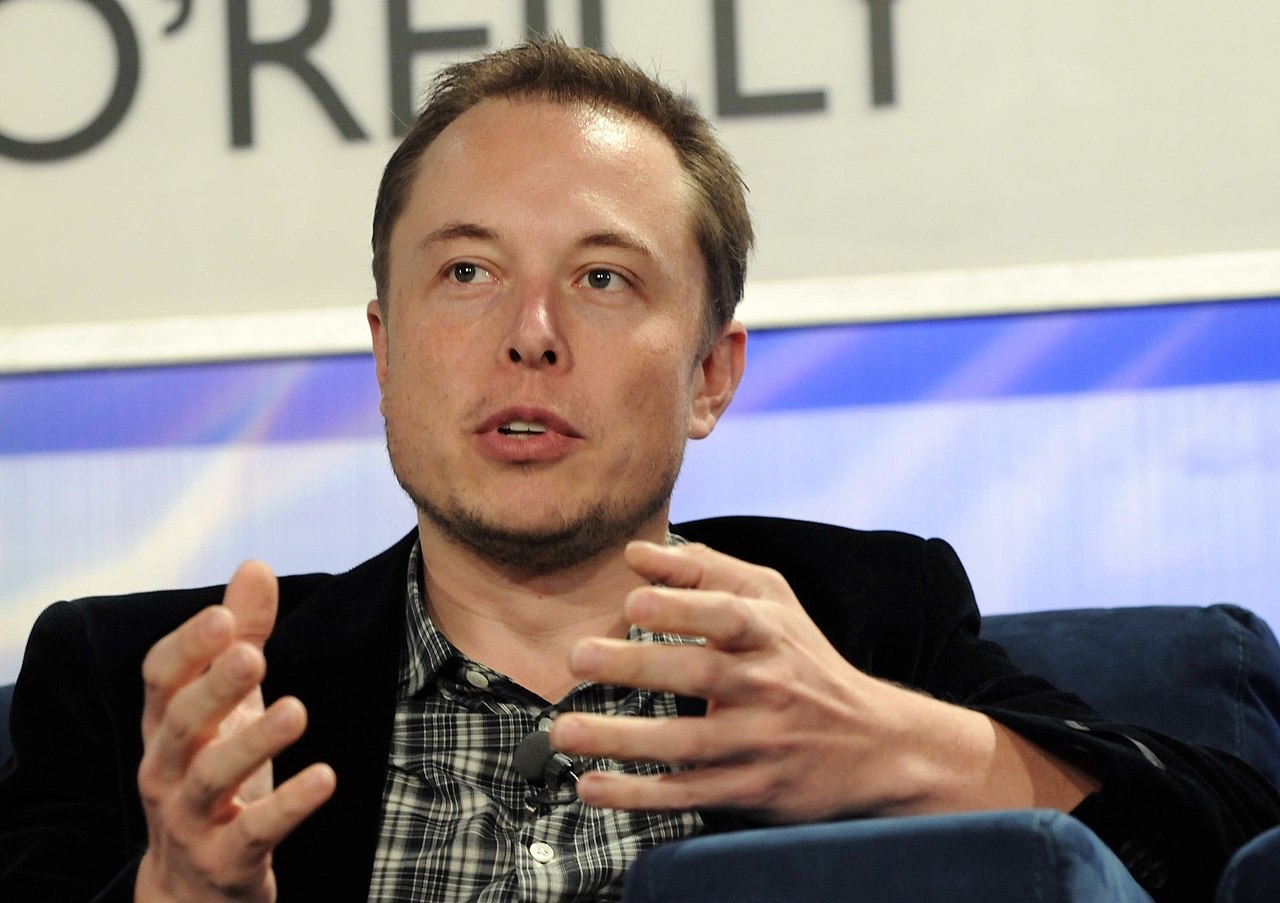

The kicker? This same professor, Rick Arrowood, had explicit policies forbidding students from using AI tools for assignments, with steep penalties for those caught.

When confronted, Arrowood admitted to using AI tools including ChatGPT, Perplexity AI, and Gamma to “refresh” his teaching materials. His response had all the self-awareness of someone caught with cookie crumbs while enforcing a strict no-snacking policy.

Arrowood has since conceded that professors should think harder about using AI and disclose to their students when and how it’s used, in what might be the academic understatement of 2025. The new stance suggests that the debacle was, for him, a teachable moment.

Stapleton wasn’t having it. “He’s telling us not to use it, and then he’s using it himself,” she pointed out, with the righteous indignation of someone paying over $8,000 for allegedly handcrafted educational content.

The Refund Battle

Armed with evidence that would make a digital forensics expert proud, Stapleton filed a formal complaint demanding a full refund. Her argument was simple: you can’t charge premium prices for AI-generated content while simultaneously banning students from the same tools.

Despite multiple meetings and clear documentation, Northeastern University denied her refund request. Apparently, the irony of enforcing different standards for faculty and students was lost on administration officials.

What makes this clash particularly fascinating is the generational divide it exposes. For Gen Z students like Stapleton, AI tools are simply part of their technological ecosystem, not unlike how millennials view smartphones. They expect either consistent rules for everyone or transparent acknowledgment of AI’s role in education.

This incident isn’t just about one student’s tuition battle. It’s the canary in the coal mine for academic institutions struggling to navigate AI integration in education.

The solution seems obvious: universities need comprehensive AI policies that apply equally to students and faculty. This means either allowing students the same AI access as professors (with proper attribution) or establishing AI-free zones where human-generated work is required from everyone. Anything else is just expensive hypocrisy wrapped in academic jargon.

As for Stapleton’s $8,000? That might be gone, but her case has already sparked a much more valuable conversation about fairness in the AI age.