Recording calls for quick cash seemed harmless until it turned out that strangers could listen to your private conversations. The Neon app, which rocketed to viral success by paying users to record their phone calls for AI training data, exposed every user’s personal information through a security flaw that made their most intimate conversations accessible to any other logged-in user.

The Easy Money Trap

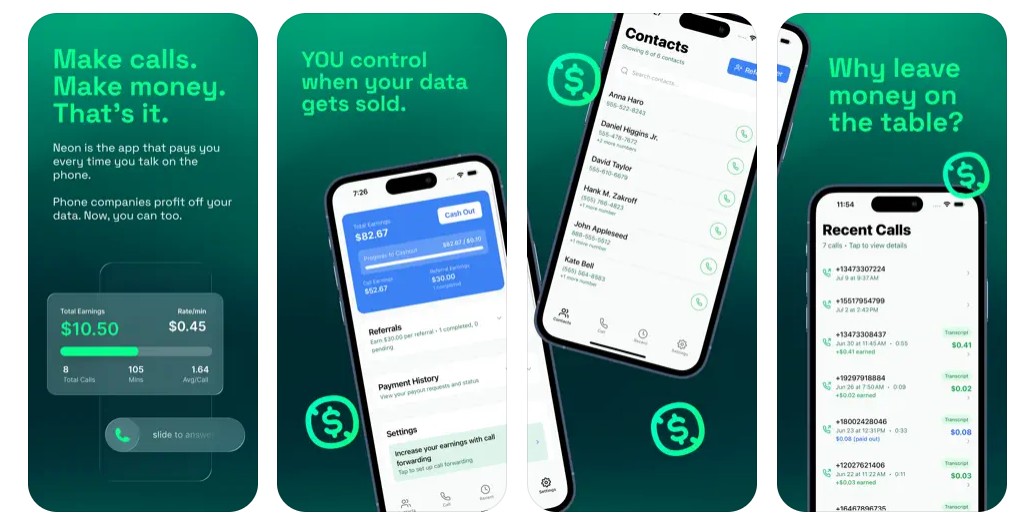

Users earned pennies per call while unknowingly creating a data goldmine for AI companies.

Neon’s premise was seductive in its simplicity: record your phone calls, get paid, help train AI models. The app rewarded lengthy conversations, and some users made extended calls specifically to maximize their earnings. Within days of launch, Neon climbed into the top five free iPhone apps, with more than 75,000 downloads in a single day, according to TechCrunch. Users saw dollar signs. AI companies saw training data. Nobody saw the security disaster brewing in the backend.

A Master Key for Everyone

Any logged-in user could access every other user’s calls, transcripts, and phone numbers.

TechCrunch discovered that Neon’s servers had no meaningful access controls. Any authenticated user could tweak basic API requests to pull data belonging to every other user: phone numbers, raw audio files, full transcripts, call durations, and earnings data. The backend essentially handed every user a master key to everyone else’s private information. Your grandmother’s medical appointment, your job interview, your relationship drama: all of it was available to anyone with a Neon account and basic technical knowledge.

The Silent Shutdown

Founder Alex Kiam pulled the app offline without disclosing the breach to users.

When confronted with the security failure, Stanford-educated founder Alex Kiam quietly took Neon offline. Users received vague emails about “extra layers of security” with no mention of the massive data exposure. No breach notification. No acknowledgment that private phone numbers and intimate conversations had been accessible to anyone who bothered to look. The transparency you’d expect from a platform handling your most sensitive data? Nowhere to be found.

The Bigger Picture

Neon joins a growing list of apps that prioritize rapid scaling over user protection.

This pattern keeps repeating across the industry. Apps like Tea, Bumble, and Hinge have all suffered data-exposure scandals, yet platforms continue to put viral growth ahead of basic security. The AI gold rush has created a new category of risk: apps that monetize your personal data while treating security as an afterthought. When making money from your conversations becomes the business model, protecting those conversations apparently becomes optional.

Your private calls shouldn’t be someone else’s payday, or someone else’s to exploit.