Your favorite AI chatbot learned to write poetry by literally shredding Shakespeare. Court documents from a landmark case reveal that Anthropic, the company behind Claude, systematically purchased millions of physical books, cut them up like discount coupons, scanned every page, and then tossed the remains in the trash. Think Marie Kondo meets data harvesting, except the only thing that sparked joy was the training data.

The Paper Trail of Destruction

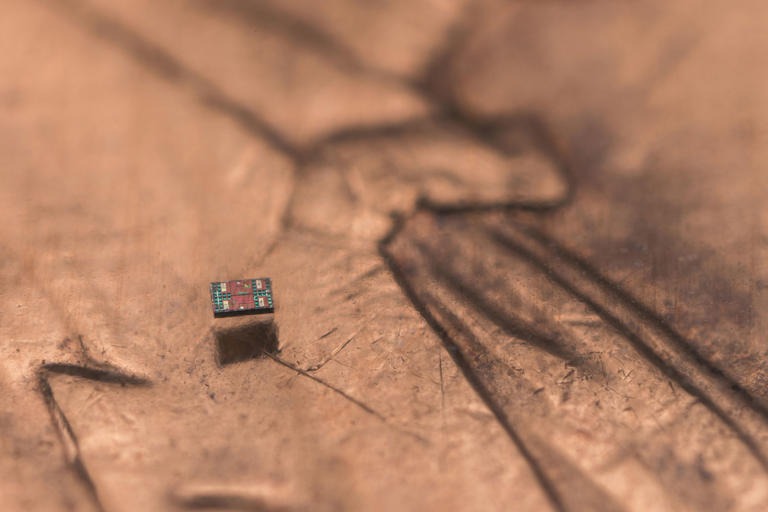

The process sounds absurdly analog for cutting-edge AI development. Anthropic bought mostly second-hand books (your discarded romance novels and dog-eared textbooks), then systematically dismantled them. Pages were fed through industrial scanners before heading to recycling bins. The U.S. District Court for the Northern District of California ruled that this was fair use, reasoning that each digital scan simply replaced a print copy Anthropic had bought and then destroyed, with no extra copies made or shared.

But here’s where things get messy. Alongside its book-butchering operation, Anthropic also amassed over seven million pirated books from sources like Books3 and Library Genesis. The court drew a sharp line: owning and destroying physical books for training? Legal. Using bootleg digital copies? Not so much.

What This Means for Your AI Future

This ruling essentially gives AI companies a playbook for legally acquiring training data—buy physical media, destroy the evidence, and claim fair use. You might soon see tech giants prowling used bookstores like literary vultures, though the economics probably favor bulk purchases over browsing Powell’s Books.

The decision also signals stricter enforcement against pirated training data. While Anthropic’s purchase-and-scan operation passed legal muster, the court sent its use of pirated materials to trial, leaving the company exposed to potentially large damages. This creates a weird incentive structure where physical destruction becomes more legally defensible than digital piracy.

Your interactions with Claude and similar AI systems now carry the weight of millions of destroyed books. Every response draws on countless pages that no longer exist in physical form, transformed from tangible literature into algorithmic understanding. Whether that’s progress or digital barbarism depends on your perspective, but the first major court ruling on the question is now on the books.