Despite its reputation, our investigation found that Consumer Reports provides no test data for most of its reviews, especially in categories like consumer electronics and small appliances, and that many of its reviews are templated and duplicated.

With $200M in annual revenue, Consumer Reports (CR) has positioned itself as the gold standard for unbiased reviews, claiming to deliver “100% unbiased” recommendations and “No fake reviews.”

Leveraging that reputation, CR has built an enormous reach: it boasts the largest print circulation of any product review brand in America and attracts 14.43 million unique monthly visitors to its website, with 42% of that traffic coming from Google alone, according to SimilarWeb.

However, our findings tell a different story. Despite its vast reach and financial power, our investigation revealed significant gaps that call the credibility of its testing process into question. We reached out to Consumer Reports on November 8, 2024, for clarification but received no response.

Why would anyone do this? What’s the motive? There’s a simple explanation: affiliate marketing pulls in an astounding $200 billion annually, creating immense financial incentives for publishers to prioritize revenue over accuracy.

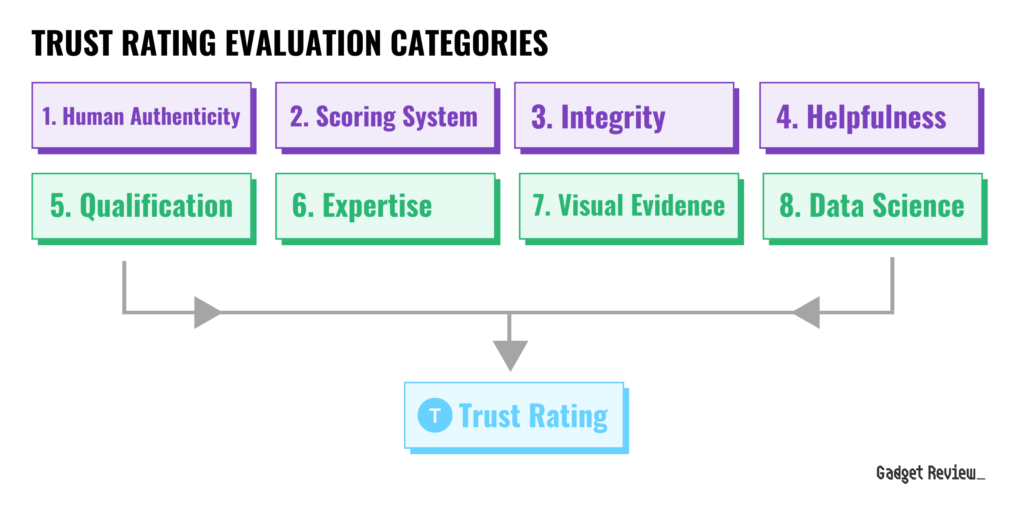

As 20-year product testers, we saw this trend firsthand and created a Trust Rating system to analyze whether a product reviewer genuinely tests the products they claim to evaluate.

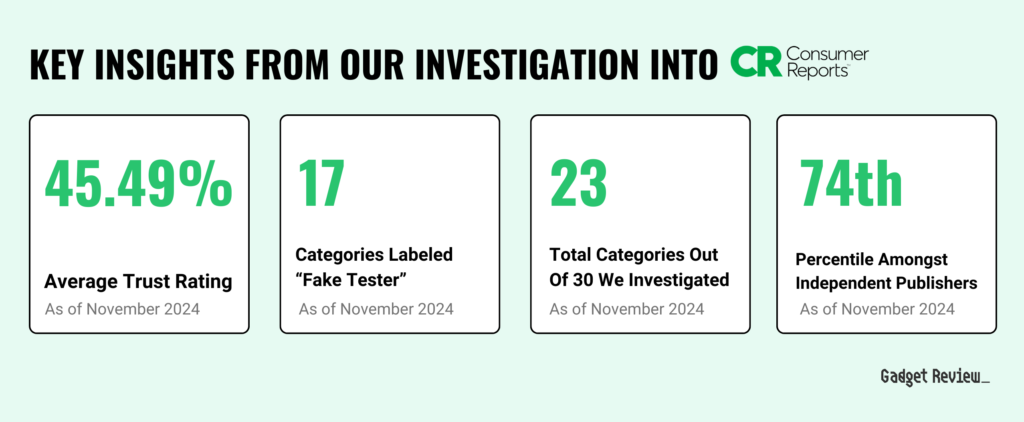

Here’s what we found during our investigation:

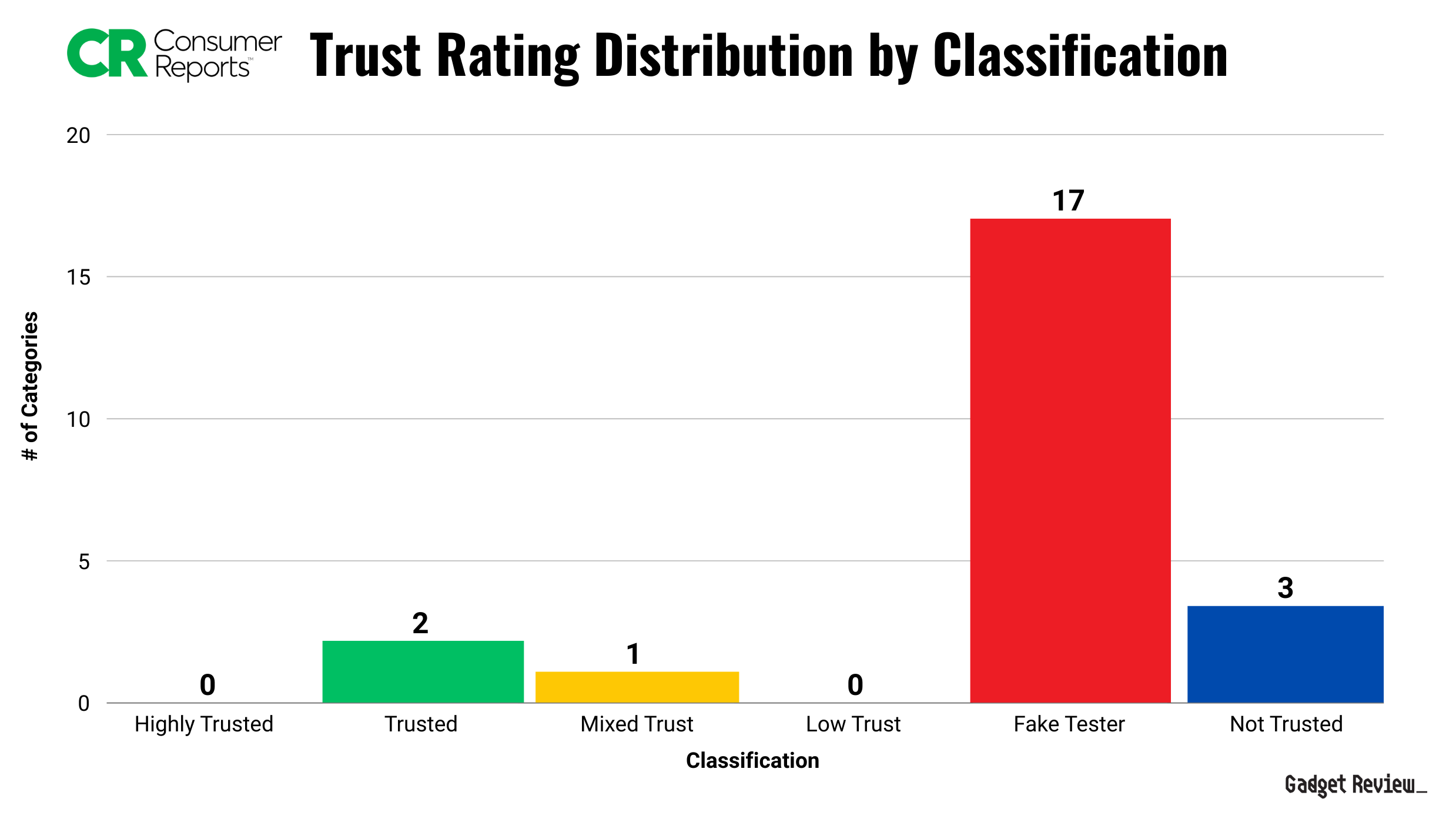

- Alarmingly, 17 of the 23 categories we investigated earned Consumer Reports our “Fake Tester” classification. Only three categories showed evidence of credible testing.

- Their reviews often follow a cookie-cutter format, with the same sentences repeated in nearly identical paragraphs used across different products.

- Claims of rigorous testing are undermined by vague terms like “excellent,” with no measurable results or real-world photos.

- Despite its massive revenues, CR relies on vague scores with little or no test data for readers to evaluate independently.

- Reviews also lack visual proof, such as photos or videos of testing, raising doubts about whether CR evaluates products as rigorously as it claims.

- CR scored just 45.49% on our Trust Rating, which is a failing grade.

To be clear, we’re not saying there’s irrefutable proof of fraud, but we found enough circumstantial evidence to seriously question the extent of CR’s product testing.

While evidence of credible testing exists in three categories, in 17 others there was a notable absence of real-world photos, measurable test results, or anything beyond templated reviews, despite CR’s claims that it tests those products.

In the remaining three categories, labeled “Not Trusted,” CR doesn’t claim to test the products, but the reviews still aren’t helpful.

Below, you’ll find a detailed explanation of how the Trust Rating works, measuring the level of transparency, expertise, and data science in product reviews.

This isn’t just a Consumer Reports issue. Over a three-year investigation of nearly 500 product reviewers, we found:

- 85% of review sites are unreliable, cutting corners in their evaluations.

- 45% of these sites fake their testing entirely, relying on duplicative or fabricated content.

Even trusted names like Good Housekeeping and Popular Mechanics show similar issues.

The best reviewers we analyzed, like RTINGS and Wirecutter, set the standard for transparency.

They consistently provide detailed test data, share visual proof like photos and videos of their testing, and use data science-backed methodologies to substantiate their claims. Consumer Reports, by comparison, lacks the transparency and evidence needed to support its recommendations, leaving consumers in the dark.

Given CR’s claim to be the leader in trust and reliability, its low Trust Rating and duplicated reviews cannot be overlooked.

Cookie-Cutter, Templatized Reviews

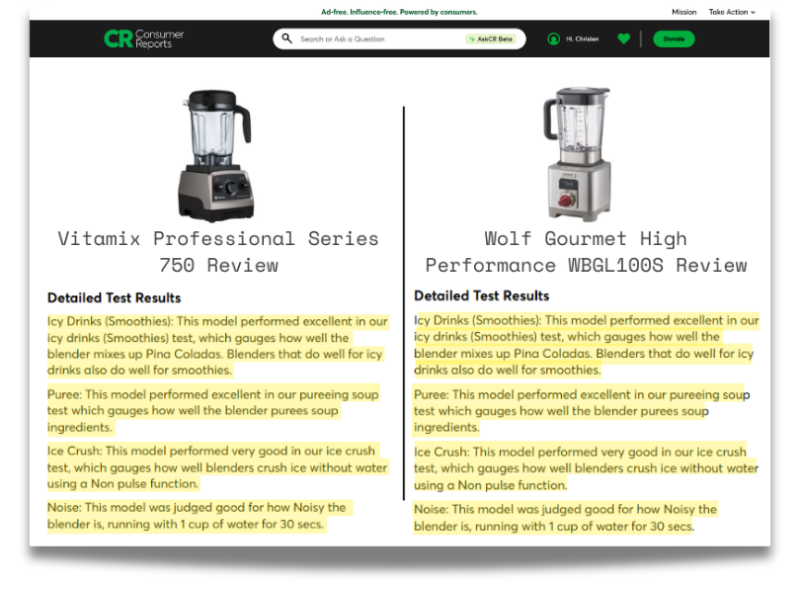

CR’s reviews often rely on repetitive, templated language, especially in high-demand categories like air purifiers, monitors, blenders, routers, and TVs. Sometimes the written sections are 100% duplicated, as we discovered in CR’s blender category. In many other categories, most of the reviews are duplicated with only minor tweaks, still copying and pasting identical, unhelpful phrases like “excellent picture quality” or “very good sound” across reviews.

The blender category was the worst case of these tokenized reviews that we came across. Check out the reviews for the Vitamix Professional Series 750 and the Wolf Gourmet High Performance WBGL100S below. The “Detailed Test Results” sections? Exact duplicates, as the highlighted parts show.

It seems whoever put these reviews together didn’t even try to vary the wording between them.

Still don’t believe us? Take a look at reviews from these other categories and see for yourself.

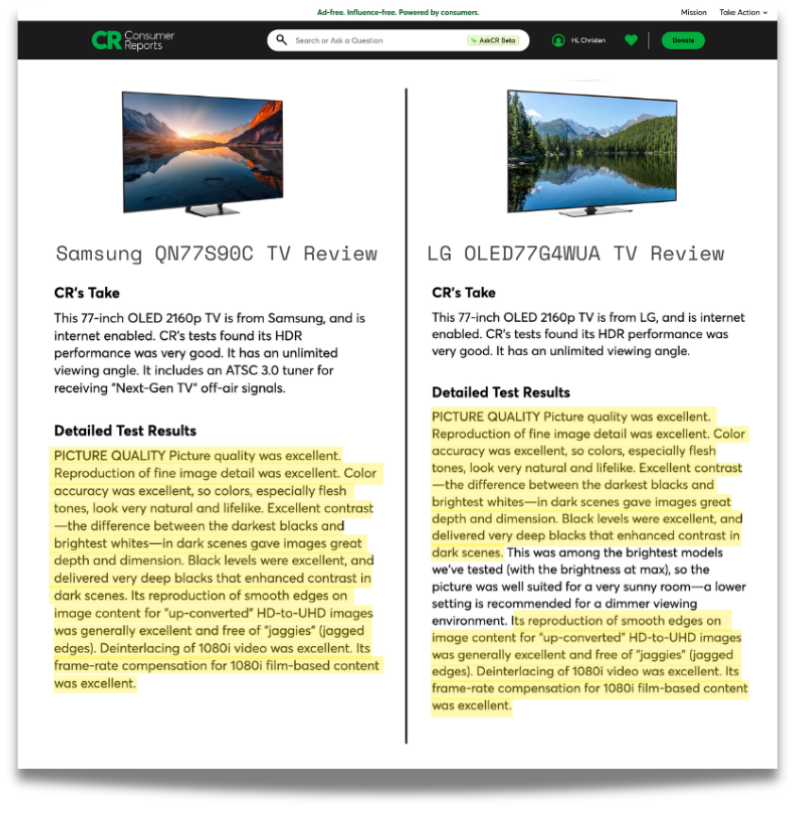

Let’s take a look at two TV reviews, for the LG OLED77G4WUA and the Samsung QN77S90C, both 77-inch OLED TVs. If you look closely, the content is heavily tokenized.

The paragraphs from the two reviews above are identical except for one sentence, the one that isn’t highlighted.
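One simple way to put a number on this kind of templating is to count how many of one review’s sentences appear verbatim in another once the product names are masked out. The Python below is a minimal sketch of that idea under stated assumptions, not our Trust Rating methodology; the function names and the sample review sentences are hypothetical placeholders, not CR’s actual text.

```python
import re


def normalize_sentences(text: str, model_names: list[str]) -> list[str]:
    """Split text into sentences, mask product names, and normalize case/spacing."""
    for name in model_names:
        # Replace each (hypothetical) product name with a placeholder so that
        # swapped-in model names don't make identical sentences look different.
        text = re.sub(re.escape(name), "<MODEL>", text, flags=re.IGNORECASE)
    # Naive splitter: break after '.', '!' or '?' followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [" ".join(s.lower().split()) for s in sentences if s.strip()]


def shared_sentence_ratio(review_a: str, review_b: str, model_names: list[str]) -> float:
    """Fraction of review_a's sentences that also appear verbatim in review_b."""
    a = normalize_sentences(review_a, model_names)
    b = set(normalize_sentences(review_b, model_names))
    if not a:
        return 0.0
    return sum(1 for s in a if s in b) / len(a)


# Hypothetical example text, not taken from any real review.
review_1 = "The Model X has excellent picture quality. Sound is very good. The remote is easy to use."
review_2 = "The Model Y has excellent picture quality. Sound is very good. Setup took a while."
print(round(shared_sentence_ratio(review_1, review_2, ["Model X", "Model Y"]), 2))  # 0.67
```

Exact matching is deliberately strict: it only flags sentences that are copied word for word. Near-duplicates with a few swapped words would need a fuzzier comparison.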

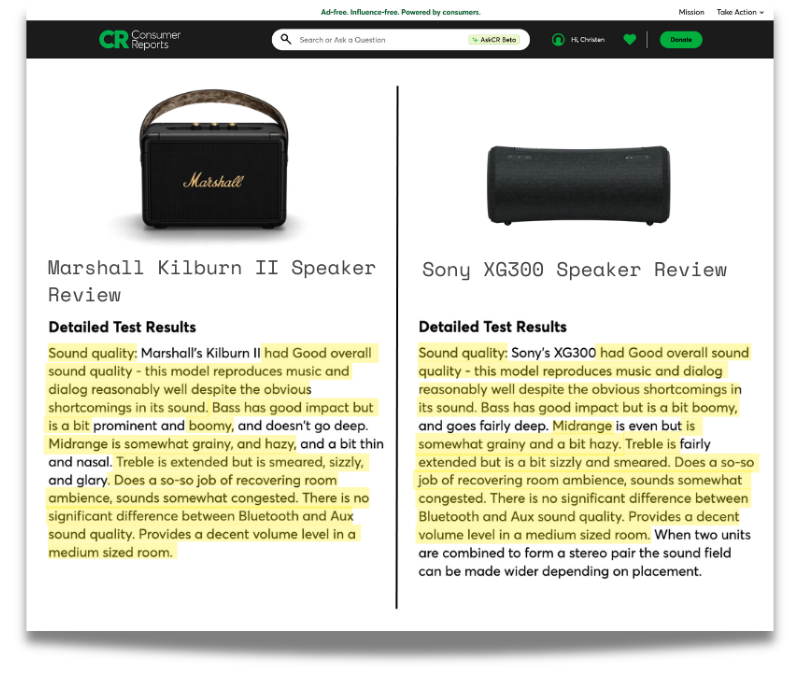

In the speakers category, have a look at CR’s Marshall Kilburn II and Sony XG300 reviews below. As with the TVs, most of the sentences are exact duplicates of each other.

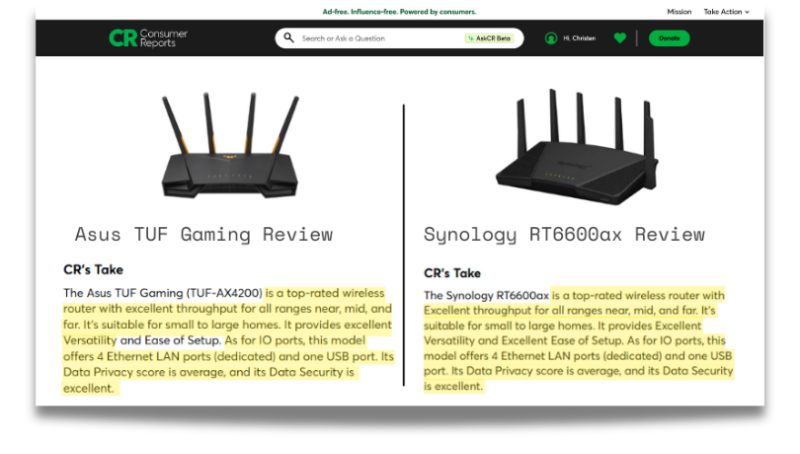

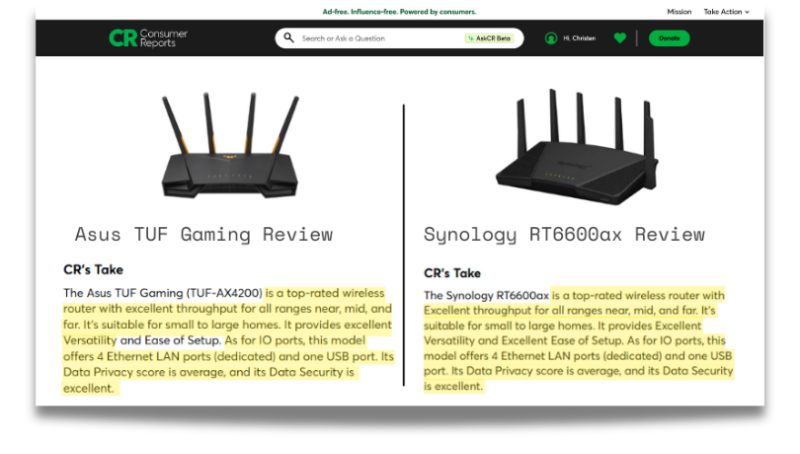

This happens in routers as well. Look at CR’s Asus TUF Gaming and Synology RT6600ax reviews. These written review sections are even shorter than those for TVs and speakers, with no “Detailed Test Results” section at all. Yet they still feature “CR’s Take” sections, which are nearly identical; only the router model names have been swapped in.

In place of hard data, these sections offer statements like “contrast is excellent” (in the TV reviews), without any actual test measurements or details about the equipment used, such as luminance meters or colorimeters, that would indicate proper testing took place. It’s as if the word “tested” is being used as a placeholder rather than an assurance of rigor.

Where’s the Test Data?

One of the biggest revelations from our investigation? In 17 of the 23 CR categories we examined, CR consistently withholds test results for the products it reviews and claims to test, which earns each of those categories our “Fake Tester” classification.

You can see the Trust Ratings for all 23 CR categories and how we graded each below.

We do trust CR in three categories. For coffee makers (84.53% Trust Rating), printers (71.60%), and office chairs (67.60%), CR provides some quantitative data. For instance, in coffee makers, they measured brew time within a specific temperature range (Indicator 8.7).

Here’s the catch, though: these are among the easiest categories to test. The equipment is affordable and accessible. For coffee makers, you just need a timer, thermometer, and pH meter to test the most important criteria, like brew flavor, temperature, and speed. But what happens with more complex categories, like TVs, where testing requires expensive gear like colorimeters to measure brightness and color accuracy? In those cases, CR’s Trust Ratings fall drastically. For TVs, CR scored a failing 47.60%, and speakers earned CR’s lowest score, 19.60%.

Testing in these categories demands costly, sophisticated equipment. For example, testing air purifiers requires particle counters, which range from $200 to $3,000. Colorimeters for TVs and monitors start around $200 and can soar to $5,000. For speakers, a dedicated testing chamber that isolates sound for accurate measurements can cost $500 to $5,000.

So CR provides quantitative test data in a few categories while leaving others without any. This inconsistency makes it hard to know the true extent of CR’s testing practices.

Here’s what we mean by this pattern of withholding test results in most of CR’s categories. We’ll start with TVs.

How well does CR test TVs?

Terribly. Again, they only received a 47.60% Trust Rating for their TV category.

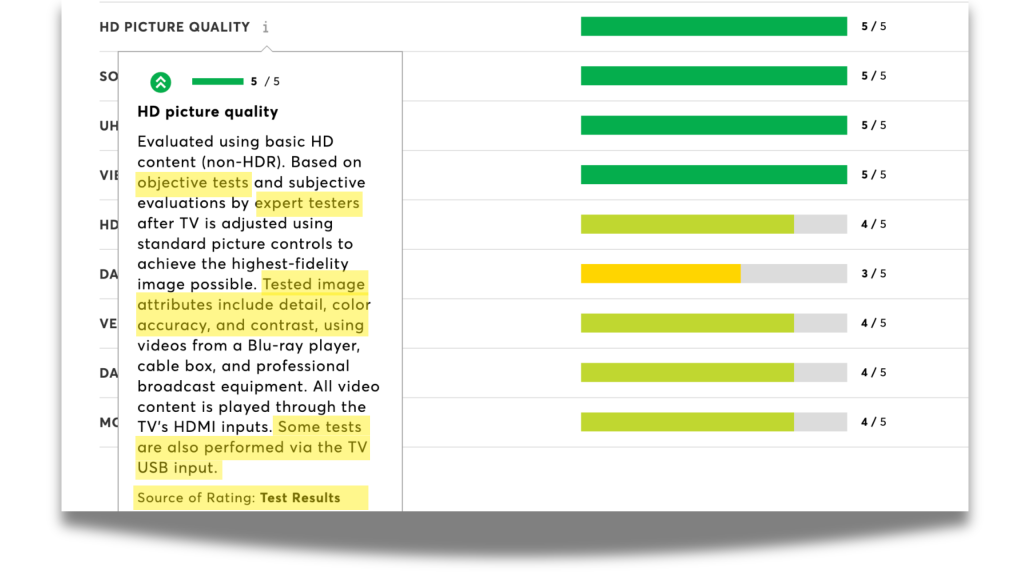

Let’s take a look at this LG TV review’s Results section. It shows that CR rates TVs based on multiple criteria, many of which we look for with the Custom Questions in the Trust Rating’s Data Science category (like Color and Picture Quality).

At first glance, this LG TV review looks pretty thorough and reliable. But a score out of 5 is pretty basic.

If these TVs were truly being tested, we’d expect the reviews to include detailed results, precise units of measurement (Indicator 8.4), and a list of testing equipment used (Indicator 7.3).

So the score out of 5 isn’t very helpful. How did this LG TV get a perfect score on Picture Quality? Where are the test results to back up that 5/5? Luckily, there’s a tooltip that should go further in depth and show the actual test results, right?

Wrong. Unfortunately, when you mouse over the tooltips to get more information on their bizarrely simple scoring, there isn’t much beyond additional claims about the various criteria they tested.

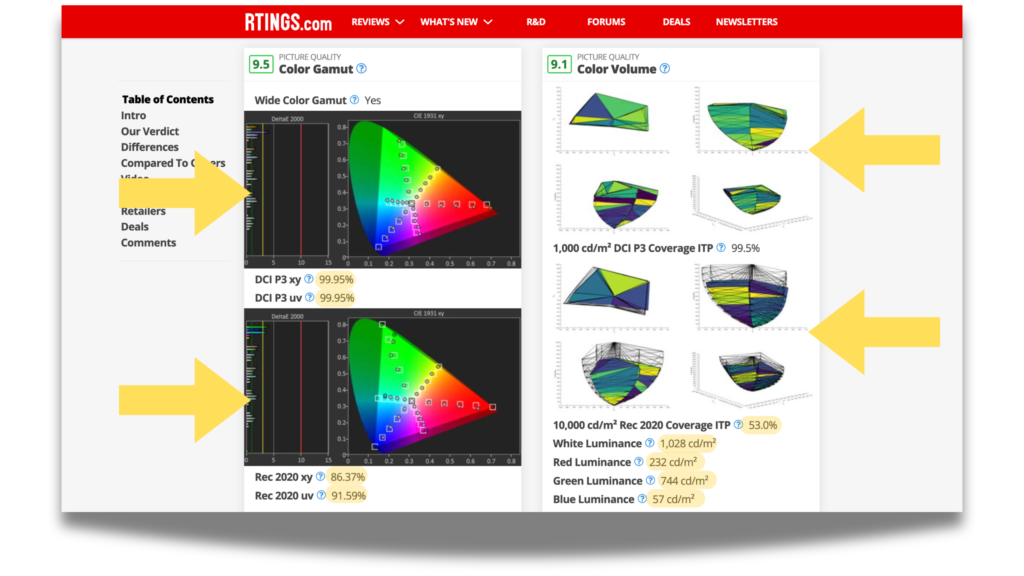

When testing picture quality, a video calibrator would use a colorimeter to measure brightness in nits (cd/m²), producing a figure such as 422 cd/m². Yet this key measurement, along with others such as color gamut and contrast ratio, is missing in every review.

Genuine testing produces actual hard data, but there isn’t any here, just an explanation about what they were testing for.

You may try scrolling down the review to find any sort of test result. Then you’ll spot a Detailed Test Results section that must surely contain the test results to back up their scores… shouldn’t it?

Turns out it’s the exact opposite of “detailed.” Even in the section most likely to provide test data, there’s only qualitative language with no quantitative data. There are no color gamut graphs, no contrast screens, and no brightness measurements. Instead, we get statements like “brightness is very good” or “contrast is excellent.”

If they were really testing contrast ratio, they’d provide a quantitative test result in an x:y format and mention the luminance meter they used.
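To show what that x:y figure looks like, here is a minimal Python sketch that derives a contrast ratio from two luminance-meter readings, one on a full-white patch and one on a full-black patch, both in cd/m² (nits). The 422 cd/m² white reading reuses the brightness example above; the 0.05 cd/m² black level is a hypothetical value for illustration, not a measured result.

```python
def contrast_ratio(white_nits: float, black_nits: float) -> str:
    """Return a contrast ratio in "N:1" form from white and black luminance readings.

    Both readings are in cd/m² (nits), as a luminance meter would report them.
    """
    if white_nits < 0 or black_nits < 0:
        raise ValueError("luminance readings cannot be negative")
    if black_nits == 0:
        # A zero reading on black (possible on OLEDs, which switch pixels off)
        # makes the ratio effectively infinite.
        return "inf:1"
    return f"{white_nits / black_nits:,.0f}:1"


# Hypothetical readings: 422 cd/m² on white, 0.05 cd/m² on black.
print(contrast_ratio(422, 0.05))  # 8,440:1
```

The point is that a real result is a ratio of two measured luminances with units behind it, which is exactly what “contrast is excellent” leaves out.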

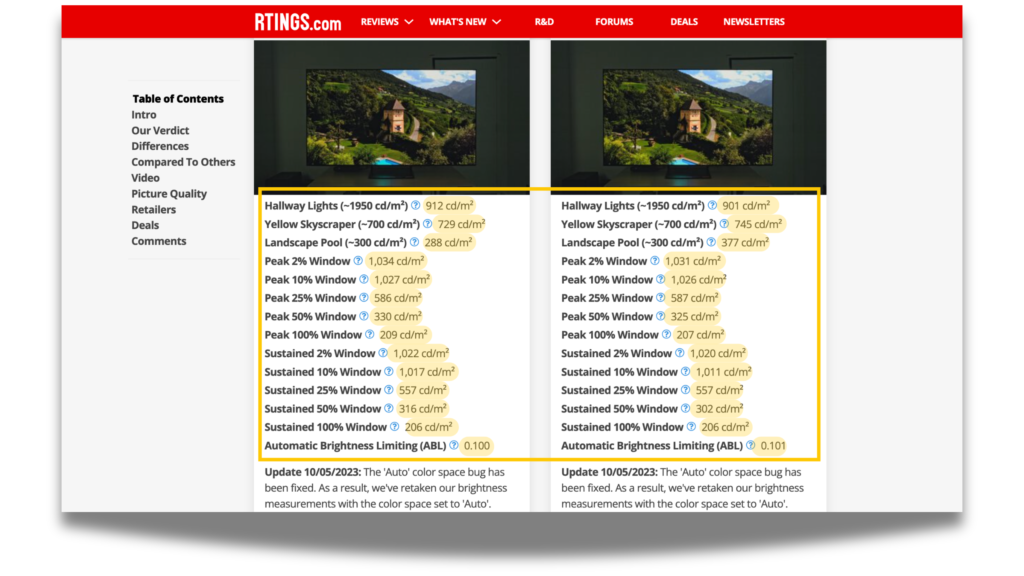

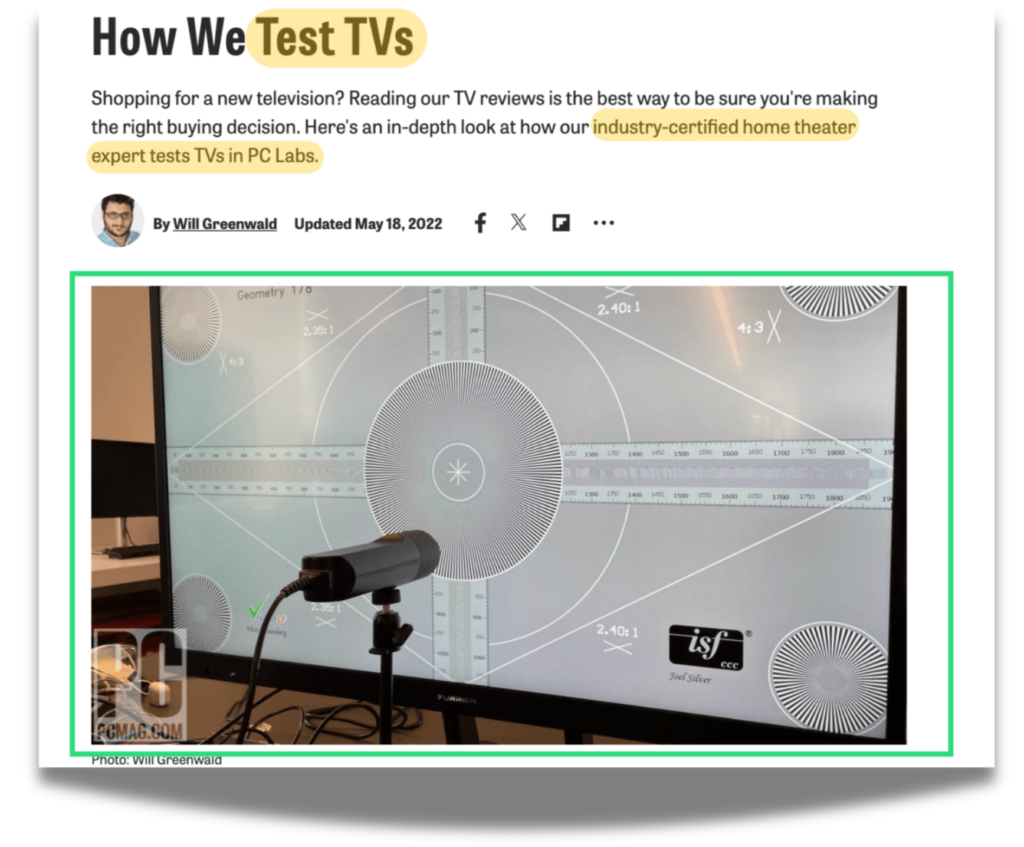

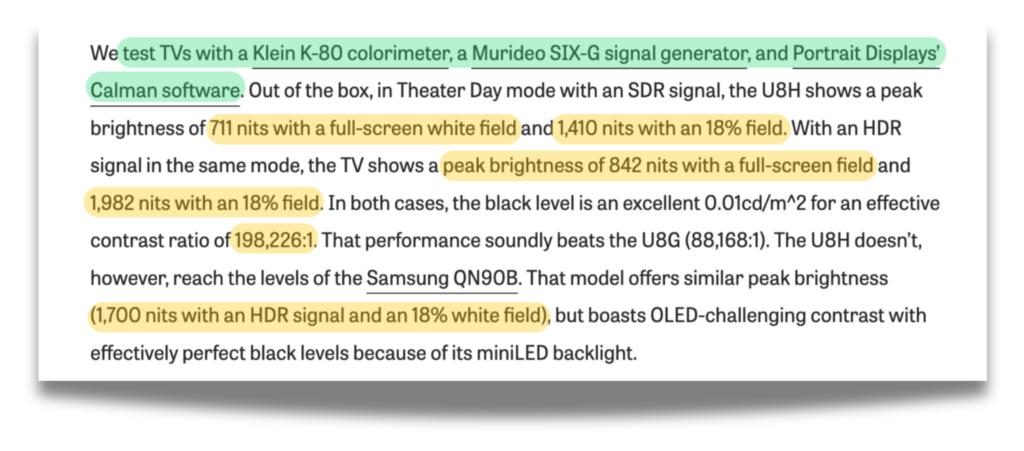

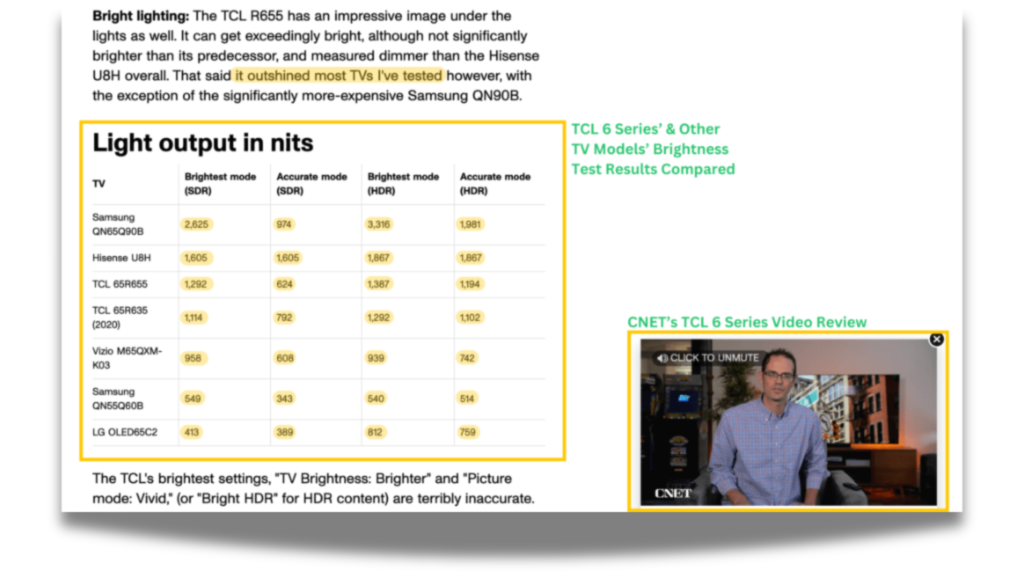

In contrast, the top three trusted TV reviewers (RTINGS, PCMag, and CNET) demonstrate clear, well-documented testing.

- RTINGS scored an outstanding 101.40%, providing detailed, unique reviews that include real images and screenshots of test results in specialized software, like color gamut graphs analyzed in Calman software.

- PCMag, ranked #2 with a solid 95.60%, also demonstrates clear evidence of testing, noting tools like the Klein K-80 colorimeter to measure a TV’s brightness in nits.

- CNET holds a respectable 80.25% Trust Rating. While their two-year-old TV testing methodology (Indicators 8.1 – 8.3) could use an update, it’s helpful that it exists and that their individual reviews are supported by quantitative test data.

Here are some examples from these three publications showing what we’re looking for when we mention measurable test results, equipment, and units of measurement:

RTINGS:

PCMag:

CNET:

This is the difference between trusted testers and CR—clear, documented testing versus vague claims.

Remember that CR subscribers are paying for these unhelpful reviews. Without these measurements, readers are left to trust CR’s word rather than seeing the hard evidence of their process.

CR’s vague reviews are a big reason for their failing TV Trust Rating. And again, this happens in most of CR’s categories, like routers.

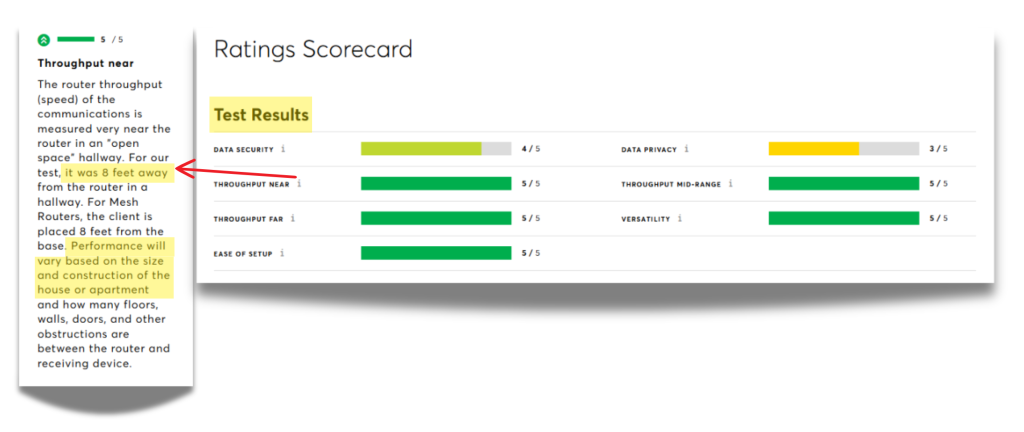

So how well do they test routers?

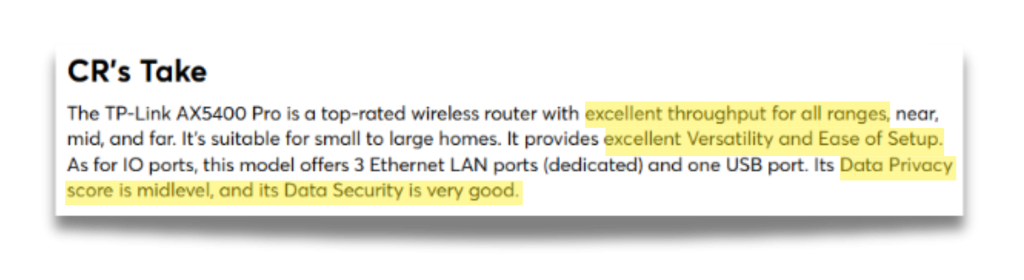

CR’s router category also earned a pretty bad 45.20% Trust Rating. In this TP-Link router review, you’ll see the same Ratings Scorecard with the basic 5-point scoring system that they call “test results”.

Like we saw with how CR handled TVs, the test results section for their router reviews follows a very similar structure.

Lots of important criteria are examined (like upload speeds, download speeds, and range, which they collectively call throughput) and supposedly tested. Yet each criterion still receives only a simple score out of five. Again, the tooltips contain no useful information, just further explanation of what makes up each test criterion without any actual test results.

If you keep scrolling, you’ll see that the detailed test results for routers are even more anemic than they were for televisions.

There isn’t much to go off of here beyond qualitative explanations of how the router performed. There’s no information about actual download speeds, upload speeds, latency, or range testing.
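For comparison, a quantitative router result would be a throughput figure in Mbps at a stated distance. The Python below is a minimal sketch assuming a timed file transfer as the measurement; the transfer sizes, durations, and distances are hypothetical values for illustration, not results from any review.

```python
def throughput_mbps(bytes_transferred: int, seconds: float) -> float:
    """Convert a timed transfer into megabits per second (1 Mbps = 1,000,000 bits/s)."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return bytes_transferred * 8 / seconds / 1_000_000


# Hypothetical runs: (distance from router in feet, bytes transferred, seconds taken).
runs = [(5, 125_000_000, 2.0), (25, 125_000_000, 4.0), (50, 125_000_000, 10.0)]
for feet, size, secs in runs:
    print(f"{feet} ft: {throughput_mbps(size, secs):.0f} Mbps")
```

With these made-up numbers, the runs print 500, 250, and 100 Mbps, the kind of distance-by-speed table a reader could actually compare across routers.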

As mentioned, this lack of test data doesn’t end at routers and TVs. You can view the rest of our Trust Rating analysis on Consumer Reports’ profile page.

How CR makes it look like they’re testing thoroughly

In an attempt to gain our trust, they use tactics that create a smokescreen, making their testing look more thorough than it really is. In truth, it often seems like the tests aren’t happening at all.

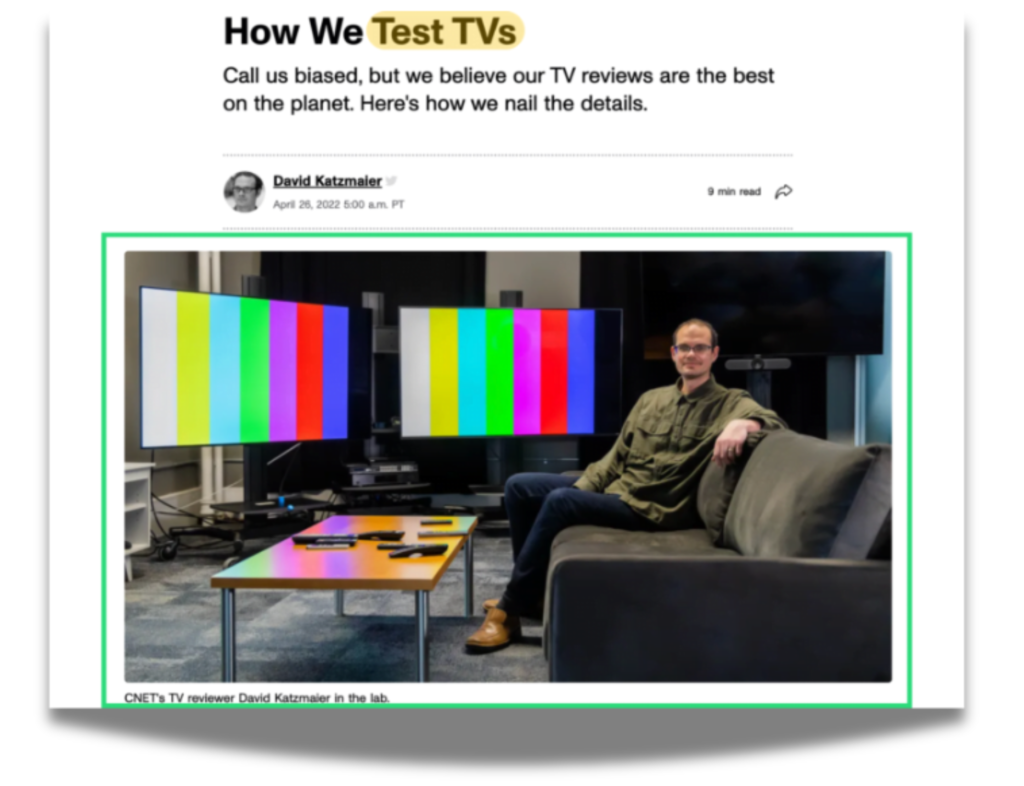

Exhibit A: CR posts “How We Test” videos, like their “How We Test TVs” segment, to showcase their lab setups and testing standards. While these videos may give the impression of rigorous testing, they’re no substitute for real results in the reviews.

This approach isn’t unique; we’ve seen similar methods on other sites that use videos and photos to build trust and subtly guide readers toward purchases. For instance, sites like BestReviews.com often display staged testing photos, but these images alone don’t confirm the use of scientific methods or proper calibration tools.

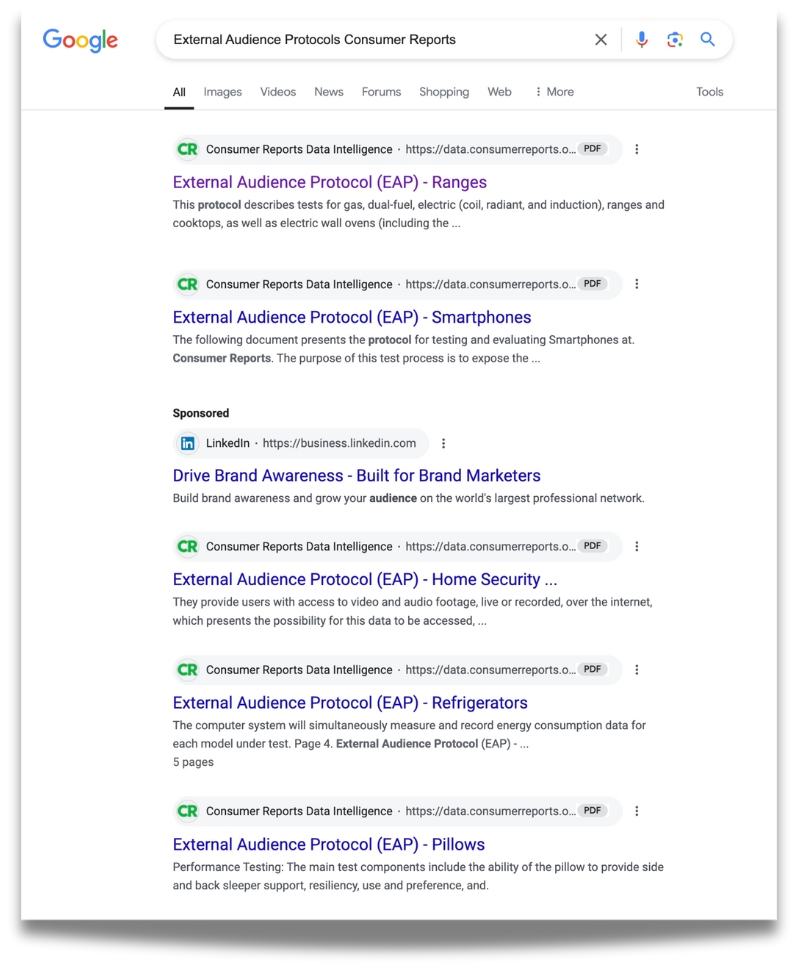

Another tactic CR uses is publishing internal documents, known as “External Audience Protocols,” that detail its testing processes. But as we’ve shown, almost none of the test data CR supposedly collects is shared with the public.

A Lack of Visual Proof

Beyond the missing data and templatized reviews, Consumer Reports lacks even basic visual proof of testing in the majority of the 23 categories we analyzed.

While there are testing images on “how we test” pages for various categories, such as “How We Test Refrigerators” and “How We Test Vacuums,” there are no photos or videos of the testing process in buying guides or individual reviews. This often leaves readers with no confirmation that the products were actually tested in-house, and it’s why we devalue many of CR’s ratings in our True Score for a product.

This absence of transparency, combined with the templated language, weakens CR’s claim of impartial testing. Instead, CR relies on the goodwill and reputation it has amassed over decades.

So why is all of this such a big deal?

For readers, it means they’re left taking CR’s word for their product tests, without any way to check the facts for themselves.

Without specific test results, and with templatized reviews proliferating, readers can’t judge the quality or expertise of the reviews. They’re left wondering if anyone actually tested the products or just wrote about them.

Instead, they’re left trusting CR’s reputation alone.

For paying members, it’s a letdown. Subscribers expect in-depth insights they can rely on. Missing data and anonymous reviews mean they may not get the value they’re paying for, undermining CR’s promise of transparency.

For brands that built their reputations on trust, restoring it means getting back to basics—providing honest, thorough, and data-backed reviews.

Readers expect clear, actionable information, not recycled templates or half-hearted scores. If Consumer Reports doesn’t course-correct, they risk becoming just another voice in a crowded review space, no different from other less established sites.

Our mission has always been to hold tech journalism accountable. When established names fail to meet transparency standards, it’s a breach of trust that affects every reader trying to make an informed buying decision. We’re here to push for change, because genuine, data-backed reviews are more than just a checkbox: they’re essential for reader confidence.

Consumer Reports has the resources to fix this. We’re calling on them to bring transparency back to their product reviews and to their readers.

Thanks to Alex Barrientos and Courtney Fraher for editing and performing additional research.