High-quality computer monitors are a must-have for any modern PC workstation. Consumers have been using these displays for decades, but the way they work may still seem a bit arcane. Understanding how a computer monitor works can come in handy for anyone, especially when adjusting and calibrating display settings.

Key Takeaways

- Computer monitors output visual information relayed via a PC’s video card or motherboard.

- Monitors use a number of different connector types, including HDMI, DisplayPort, USB-C, DVI, and VGA.

- Many monitors ship with integrated accessories, such as cameras and microphones.

Guide To How Monitors Work

A computer monitor is a visual output device that displays information sent to it by a computer’s graphics processor. This information is processed in real time, so users see the results of their input almost instantly. The digital signal your computer generates passes from the graphics chip to the monitor, where the panel’s electronics turn it into an image. The details depend on the panel technology, but in general an electrical charge is applied to each pixel in a microscopically thin layer inside the screen, which controls how much light that pixel lets through or emits.

Display Types

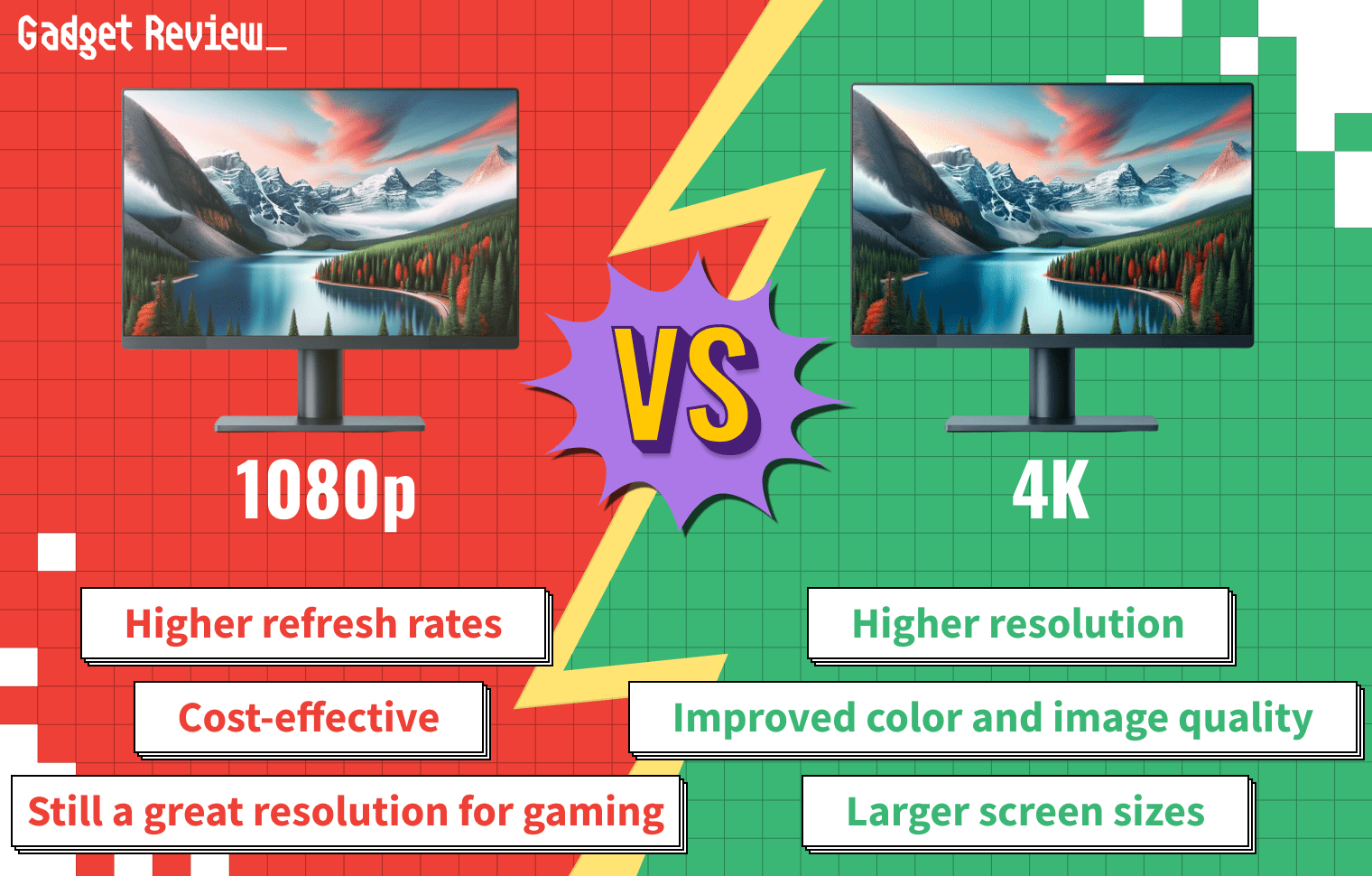

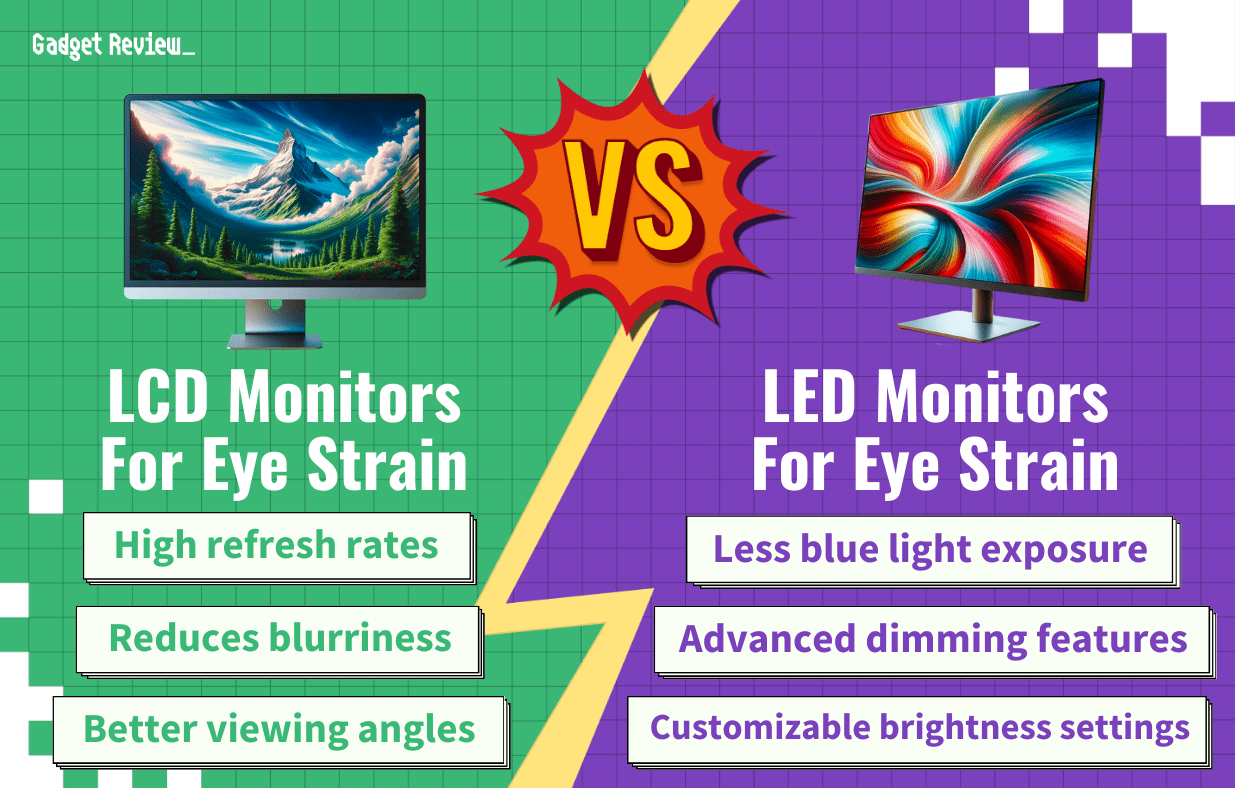

There are several kinds of monitor displays, each with its own benefits and drawbacks. Cathode ray tube (CRT) monitors are the oldest of the bunch and have largely disappeared from the market. LCDs make up the lion’s share of newly released screens, as they are inexpensive to manufacture and offer good image quality. OLED monitors (not to be confused with “LED” displays, which are LCDs with an LED backlight) are the newest design, with gorgeous high-contrast panels, though they tend to be on the expensive side.

On top of the display types, flat-screen monitors come in a number of different panel types, referring to the particular technology with which they’re constructed. Without delving too deeply into the technical specifics, the most common panel types, which are shared with TV technology, include the following:

- Twisted-nematic (TN)

TN displays feature liquid-crystal molecules that are twisted at rest and realign themselves when they receive an electrical charge. This is the oldest panel type and the one that kickstarted consumer LCDs.

- Vertical-alignment (VA)

In a VA display, the liquid crystals are aligned vertically, perpendicular to the screen, when at rest. When they receive a charge, they tilt, letting more of the backlight through. Colored filters over each subpixel then determine the color of the pixel.

- In-plane switching (IPS)

IPS panels align the liquid crystals horizontally, parallel to the screen. When they receive a charge, they rotate within that plane rather than tilting as in a VA display. This mechanism gives IPS panels wider viewing angles and better color accuracy than TN or VA technologies.

- Organic light-emitting diode (OLED)

The newest and most advanced screen tech on the market. While other screen tech involves passing a backlight through liquid crystal, OLED pixels are self-emissive, meaning they create their own light. This gives them excellent color reproduction, very low response times, and a near-infinite contrast ratio, since a totally black OLED pixel isn’t actually emitting any light at all.
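
To make the contrast claim concrete: contrast ratio is the brightness of the brightest white a panel can show divided by the brightness of its darkest black. The short Python sketch below illustrates the arithmetic only. The function name `contrast_ratio` and the brightness figures are hypothetical values chosen for illustration, not measurements of any real monitor.

```python
import math


def contrast_ratio(white_nits: float, black_nits: float) -> float:
    """Return the contrast ratio: brightest white divided by darkest black."""
    if white_nits < 0 or black_nits < 0:
        raise ValueError("luminance cannot be negative")
    if black_nits == 0:
        # A pixel that emits no light at all makes the ratio unbounded.
        return math.inf
    return white_nits / black_nits


# Hypothetical LCD: the backlight leaks a little light through "black" pixels.
lcd = contrast_ratio(white_nits=250.0, black_nits=0.25)

# Hypothetical OLED: a black pixel is simply switched off.
oled = contrast_ratio(white_nits=250.0, black_nits=0.0)

print(f"LCD:  {lcd:,.0f}:1")
print(f"OLED: {oled}:1")
```

With these made-up numbers the LCD works out to 1,000:1, while the OLED result is unbounded because its black level is zero. In practice, room lighting and measurement limits keep real-world figures finite, which is why the ratio is usually described as near-infinite.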

Insider Tip

OLED TVs are more common than OLED monitors, as OLED displays carry a risk of burn-in when displaying static elements like a computer’s taskbar or a white webpage background over long periods of time. Methods for mitigating this risk are being developed all the time, but it’s currently accepted as an inherent risk of this modern tech.

Connection Types

Monitors offer several connection types, largely the same ones you might see on a modern television set. Today, HDMI, DisplayPort, and USB-C are among the most common connectors. These cables transmit video, and in some cases an accompanying audio signal, from the source (your computer) to the output device (your monitor, in this case). Even laptops, which have these components built together, still make an equivalent connection through their internal wiring.
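
For a rough sense of how much data a video cable carries, you can multiply the number of pixels on screen by the refresh rate and the number of bits used for each pixel. The Python sketch below does that arithmetic. The function name `raw_video_gbps` is hypothetical, and the estimate is deliberately simplified: it ignores blanking intervals and the encoding overhead real connections add, so the capacity a cable actually needs is somewhat higher.

```python
def raw_video_gbps(width: int, height: int, refresh_hz: float,
                   bits_per_pixel: int = 24) -> float:
    """Estimate the uncompressed pixel data rate in gigabits per second.

    Simplified: counts visible pixels only and ignores blanking intervals
    and link encoding overhead.
    """
    pixels_per_second = width * height * refresh_hz
    return pixels_per_second * bits_per_pixel / 1e9


# 24 bits per pixel means 8 bits each for red, green, and blue.
full_hd = raw_video_gbps(1920, 1080, 60)
ultra_hd = raw_video_gbps(3840, 2160, 60)

print(f"1080p at 60 Hz: about {full_hd:.1f} Gbit/s")
print(f"4K at 60 Hz:    about {ultra_hd:.1f} Gbit/s")
```

A 4K picture has four times as many pixels as a 1080p one, so at the same refresh rate it needs roughly four times the data. That is one reason older cables and ports may not support high resolutions at high refresh rates.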

Integrated Accessories

Many newly released computer monitors feature integrated accessories and add-ons intended to make the overall experience more enjoyable. Some displays come with integrated microphones and built-in sound systems with stereo speakers. Others ship with integrated USB hubs, so you can plug accessories into the monitor instead of reaching for a port on the computer itself. These hubs usually work as what’s known as a passthrough: the monitor still needs its own connection to a USB port on your PC, and it “passes through” data between the PC and whatever is plugged into the monitor’s more accessible and numerous ports. Others have a camera attached for live streaming and participating in video calls.

Generally speaking, monitors with numerous integrated accessories can be on the expensive side compared to those without. Only spring for these if you might actually use these extra features; otherwise, you may be able to find a more advanced display with fewer bells and whistles for a similar price.

Laptops and Desktops

While a monitor plugged into a desktop is its own device, with its own power switch, laptops offer a different type of screen design. Laptop displays are fully integrated into the computer itself. This means when you power down a laptop, the monitor also powers down, as they usually can’t operate independently of one another. Due to the clamshell nature of their design, laptop screens are at a higher risk for accidental damage than standalone monitors.